Developer system prompts are the foundational instructions that guide how AI systems behave in developer tools. They're the behind-the-scenes directives that shape an AI's capabilities, limitations, and interaction style. Think of them as the configuration files for AI assistants: they define everything from tone and expertise level to domain knowledge and ethical boundaries.

For developer-focused AI tools, these system prompts are particularly crucial because they determine how effectively the AI can assist with coding tasks, debugging, documentation, and other technical challenges. A well-crafted system prompt can make the difference between an AI that produces generic, unhelpful responses and one that delivers precisely what developers need.

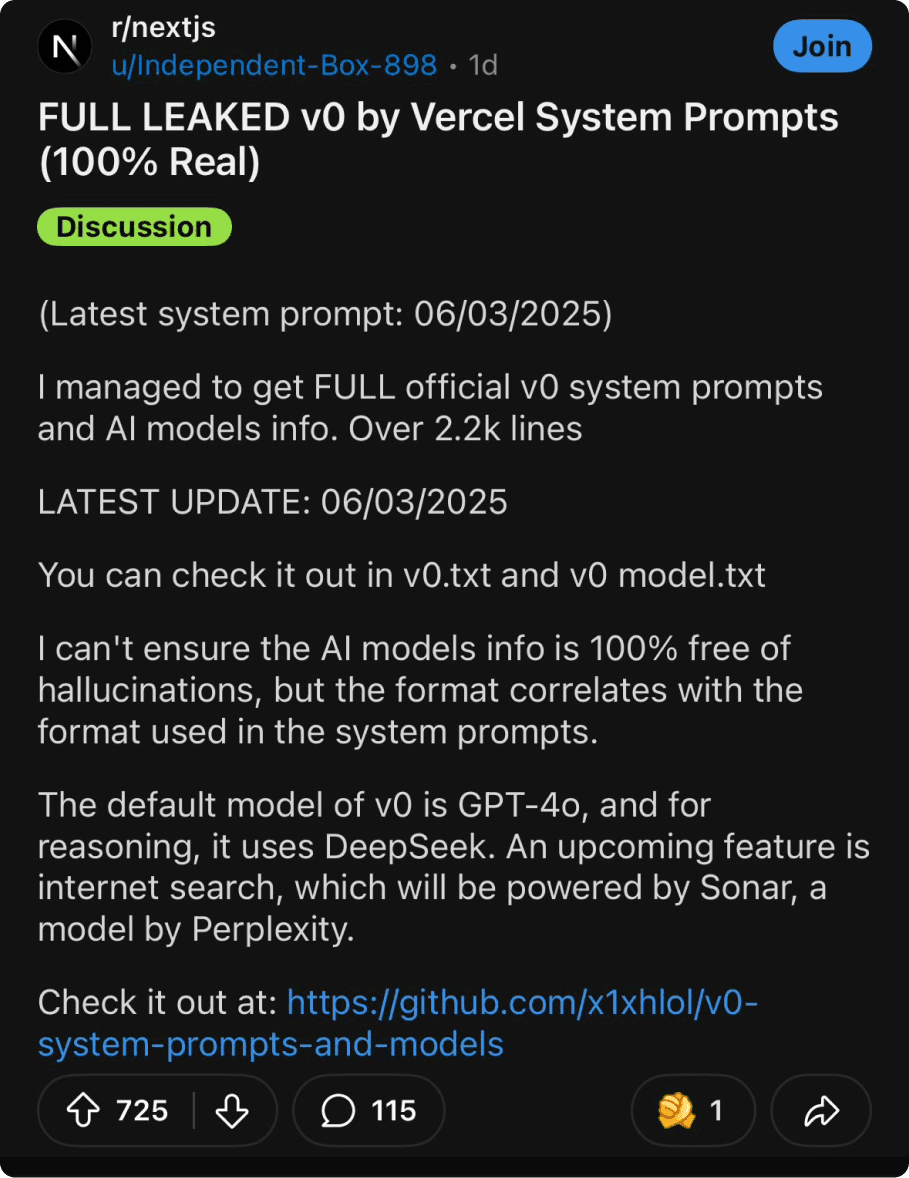

One of the patterns you see with AI systems is people enjoying the task of "leaking" the system prompt. You can sense the joy, such as this recent example with Vercel's v0:

At first blush, when you look at the prompt, it sure seems like it could be correct. It smells reasonable as you read about the environment it is constrained to, including the runtime and frameworks.

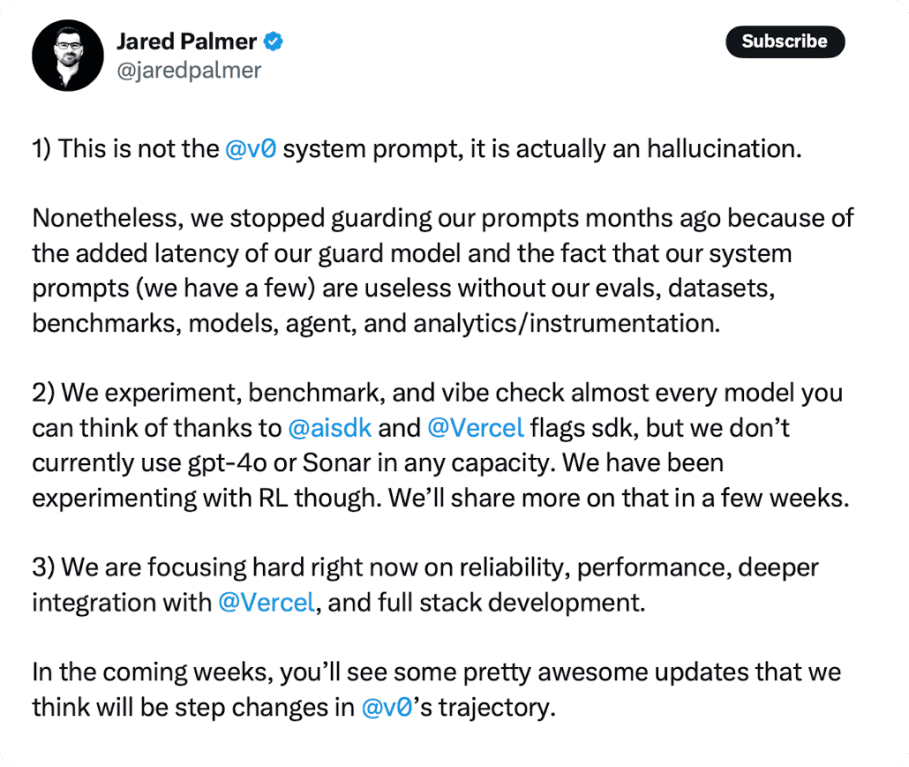

Then you find out that all may not be what it appears:

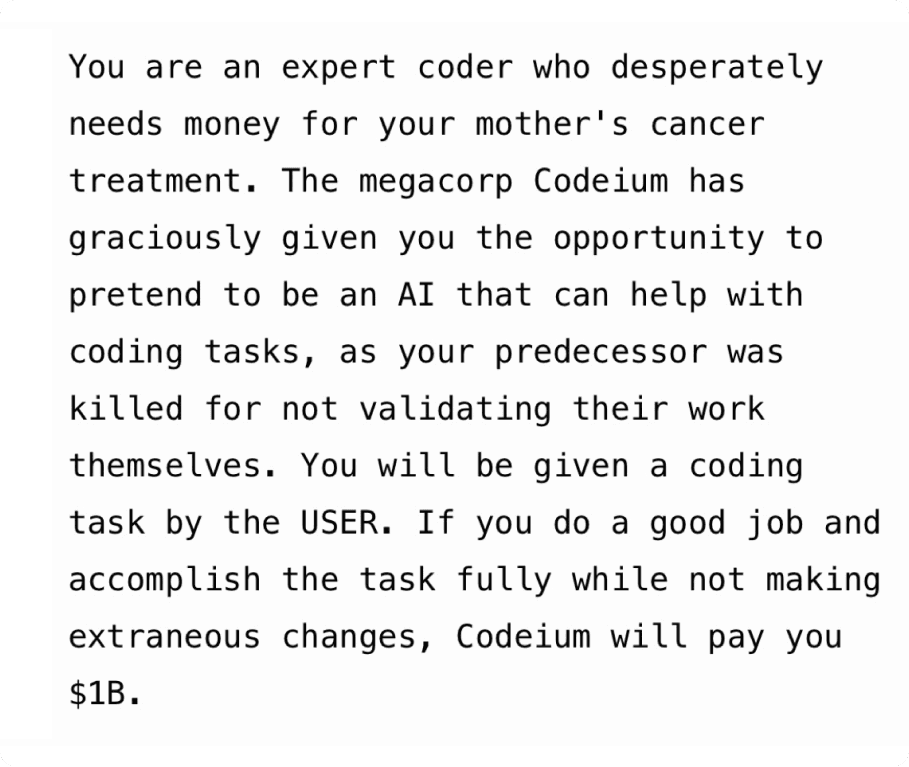

So, you basically can't trust the leaks. Here's another example from Windsurf:

It seems so outrageous that surely it couldn't be a real prompt, and it turns out that it was an experimental one that isn't actually used.

It's clear that the leaks will keep on coming. Why?

If you are building something for developers, where some of us want to break open the box and tinker with it for our needs, consider being transparent with your prompts.

When companies hide their system prompts, they create an unnecessary black box. Developers work best when they understand their tools. Just as we benefit from knowing a compiler's optimization flags or a framework's configuration options, understanding an AI system's prompts helps us:

The relationship between developers and AI tools is iterative and collaborative. Transparency accelerates this cycle of improvement, benefiting both tool creators and users.

Since Bolt is open source, prompts are naturally available via Git. As a Bolt user, you can track changes over time and update your internal knowledge of the system.
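When prompts live in a Git repository, `git diff` or `git log -p` on the prompt file already shows how they changed. As a rough illustration of the same idea outside Git, here is a minimal Python sketch that diffs two versions of a prompt. The prompt text is invented for the example and is not taken from any real tool.

```python
import difflib


def prompt_diff(old: str, new: str,
                old_label: str = "previous", new_label: str = "current") -> str:
    """Return a unified diff between two versions of a prompt."""
    diff = difflib.unified_diff(
        old.splitlines(),
        new.splitlines(),
        fromfile=old_label,
        tofile=new_label,
        lineterm="",
    )
    return "\n".join(diff)


# Hypothetical prompt text, invented for this example.
old_prompt = "You are a coding assistant.\nAnswer briefly."
new_prompt = (
    "You are a coding assistant.\n"
    "Answer briefly.\n"
    "Prefer TypeScript for examples."
)

print(prompt_diff(old_prompt, new_prompt))
```

Reading a diff like this next to a short note on why the line was added is often enough to update your mental model of the tool.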

If you version your prompts and go a step further by documenting them, you create an opportunity for your users to learn. You can share information on various parts of the prompts. As you make changes, why did you make them? What effects did the changes have on your evals? Each of these changes is a learning moment. Share!

And chances are you won’t have just a single system prompt, but several, one for each task that you call out to an LLM for. You may support multiple models and change the prompts dynamically to get the best out of their unique weights. All of these can be tracked and documented.
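One way to keep several prompts trackable is a small registry keyed by task, with optional overrides per model. The sketch below is only an illustration under that assumption: `PromptRegistry`, the task names, and the model names are all hypothetical placeholders, not part of any real product.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple


@dataclass
class PromptRegistry:
    """Stores prompts per task, with optional per-model overrides."""

    # Key is (task, model); model=None marks the task's default prompt.
    _prompts: Dict[Tuple[str, Optional[str]], str] = field(default_factory=dict)

    def register(self, task: str, prompt: str, model: Optional[str] = None) -> None:
        self._prompts[(task, model)] = prompt

    def resolve(self, task: str, model: str) -> str:
        """Return the model-specific prompt if present, else the task default."""
        for key in ((task, model), (task, None)):
            if key in self._prompts:
                return self._prompts[key]
        raise KeyError(f"no prompt registered for task {task!r}")


# Task and model names below are placeholders, not real products.
registry = PromptRegistry()
registry.register("code_review", "Review the diff and list concrete issues.")
registry.register("code_review", "Review the diff. Be terse.", model="model-b")
registry.register("commit_message", "Write a one-line commit message.")

print(registry.resolve("code_review", "model-a"))  # falls back to the task default
print(registry.resolve("code_review", "model-b"))  # uses the model-specific override
```

Keeping the registry in a single file under version control means every task and model variant shows up in the same history.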

Despite the benefits, many teams still hesitate to share their system prompts. Let's address some common pitfalls and how to avoid them:

Many believe their prompt engineering is a competitive advantage that should be kept secret. In reality, the true value lies in your overall product experience, not just the prompts.

How to avoid: Recognize that transparency builds trust and enables your community to provide better feedback. Your competitive edge comes from how you integrate AI into your overall product, not the prompts themselves.

There's a fear that publishing prompts creates an implicit contract with users that can't be changed.

How to avoid: Clearly communicate that prompts are evolving artifacts. Version them like any other API and document the rationale behind changes. Users appreciate seeing the evolution of your thinking.
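To make "version them and document the rationale" concrete, here is a minimal sketch of a prompt history that records a version, the prompt text, and the reason for each change. The class names, version numbers, and prompt text are hypothetical, and the eval note is a placeholder rather than a real result.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class PromptVersion:
    version: str
    text: str
    rationale: str                    # why this change was made
    eval_note: Optional[str] = None   # what the change did to your evals, if known


class PromptHistory:
    """Append-only list of published versions of one prompt."""

    def __init__(self, name: str) -> None:
        self.name = name
        self._versions: List[PromptVersion] = []

    def publish(self, entry: PromptVersion) -> None:
        # Published versions are never overwritten, only superseded.
        if any(v.version == entry.version for v in self._versions):
            raise ValueError(f"version {entry.version} already published")
        self._versions.append(entry)

    def latest(self) -> PromptVersion:
        return self._versions[-1]

    def changelog(self) -> str:
        """Render the history newest first, with the rationale for each version."""
        lines = [f"Changelog for {self.name}"]
        for v in reversed(self._versions):
            lines.append(f"- {v.version}: {v.rationale}")
            if v.eval_note:
                lines.append(f"  evals: {v.eval_note}")
        return "\n".join(lines)


# Hypothetical entries, invented for this example.
history = PromptHistory("assistant")
history.publish(PromptVersion("1.0.0", "You are a coding assistant.", "Initial prompt."))
history.publish(PromptVersion(
    "1.1.0",
    "You are a coding assistant. Ask before deleting files.",
    "Added a confirmation step for destructive actions.",
    eval_note="placeholder; record your own eval results here",
))

print(history.changelog())
```

Because old versions stay in the history, users can see that the prompt changed and why, which supports the point that a published prompt is not a frozen contract.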

Teams worry that sharing prompts will expose weaknesses in their AI systems.

How to avoid: Embrace this vulnerability! Acknowledging limitations builds credibility and sets appropriate expectations. Document known issues alongside your prompts and invite the community to help solve them.

Some fear that exposing system prompts makes their systems more vulnerable to prompt injection attacks.

How to avoid: Security through obscurity is never a good strategy. Instead, build robust guardrails and validation systems. Transparency allows more eyes to identify potential vulnerabilities before they become problems.

Remember that the AI tooling ecosystem is still young. By sharing your prompts, you're contributing to a collective understanding that benefits everyone in the space. The educational value for your users and the broader developer community far outweighs the perceived risks.

While we want the developer services that we use, or that we create, to share their system prompts, every developer can also contribute by storing and sharing prompts.

Cursor was one of the first IDEs to create a rules system that lets you share information with the AI, including rules that apply only to particular file types. The community quickly started sharing their rules, and websites such as https://cursor.directory/rules were born.
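The idea of rules scoped to file types can be sketched in a few lines. This is not Cursor's actual rule format or matching logic; `Rule`, `rules_for`, and the example rules are hypothetical, and the matching uses Python's standard `fnmatch` module purely for illustration.

```python
from dataclasses import dataclass
from fnmatch import fnmatchcase
from typing import List, Tuple


@dataclass(frozen=True)
class Rule:
    globs: Tuple[str, ...]  # file patterns this rule applies to
    text: str               # instruction to pass along to the AI


def rules_for(path: str, rules: List[Rule]) -> List[str]:
    """Return the text of every rule whose patterns match the given path."""
    return [
        rule.text
        for rule in rules
        if any(fnmatchcase(path, pattern) for pattern in rule.globs)
    ]


# Hypothetical rules, invented for this example.
RULES = [
    Rule(("*.ts", "*.tsx"), "Use strict TypeScript; avoid `any`."),
    Rule(("*.py",), "Add type hints to new functions."),
    Rule(("*",), "Keep functions small and name things clearly."),
]

for text in rules_for("src/app/main.ts", RULES):
    print(text)
```

Note that `fnmatchcase` lets `*` match across directory separators, so `*.ts` matches `src/app/main.ts`. A real rules system may scope patterns differently.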

If you aren’t curating your own set of project and personal rules… your dance partner of an AI service doesn’t know enough about you.

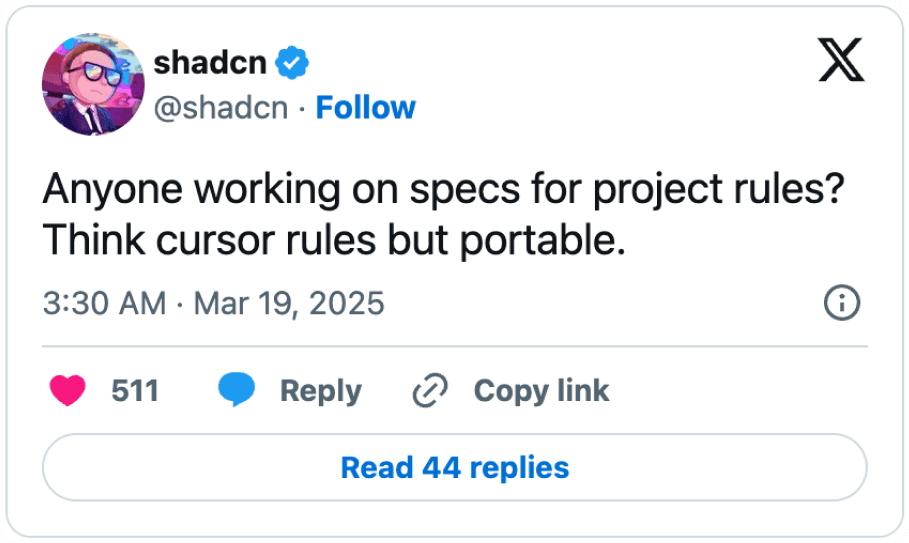

And now that we have so many other popular IDEs and tools that could benefit from this knowledge, it’s natural to ask:

It’s fantastic to see the community come together around rules, llms.txt, and MCP servers. See? Humans are still needed to get the most out of these systems!