Open source, a free tier, and checkpointing included

Google has upped the AI coding ante with the launch of Gemini CLI, a free and open source command-line tool that brings its flagship Gemini LLM directly to the developer’s terminal.

The release marks Google’s most direct push into the realm of terminal-native AI assistants, putting it in competition with the likes of Anthropic’s Claude Code and OpenAI’s Codex CLI, as well as smaller players like Warp.

While Google is a little late to the terminal-agent space, Gemini CLI arrives with a few distinct advantages as it looks to curry favour with coders.

The most immediate differentiator, one that generated much online chatter, is the cost – those who sign in with their personal Google account are given a free license for Gemini Code Assist. This provides access to Gemini 2.5 Pro, which features a 1 million-token context window, and allows up to 60 requests per minute and 1,000 per day – not bad for free.

Of course, the idea is to suck developers in and up-sell them to a more extensive plan, either usage-based billing via Google AI Studio or Vertex AI, or a paid enterprise license.

And in truth, this could prove to be a limited-time deal for the preview period. But nonetheless, neither Claude Code nor Codex CLI offers a free usage tier at present. Warp does, but it’s limited to 150 requests per month.
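To put those limits in perspective, here is a small back-of-the-envelope calculation. It uses only the figures quoted above (60 requests per minute, 1,000 per day, and 150 per month for Warp); the 30-day month and 8-hour working day are assumptions made purely for illustration.

```python
# Figures quoted in the article.
GEMINI_PER_MINUTE = 60
GEMINI_PER_DAY = 1_000
WARP_PER_MONTH = 150

# Assumptions for illustration only.
DAYS_PER_MONTH = 30
WORKDAY_MINUTES = 8 * 60


def minutes_until_daily_cap(per_minute: int, per_day: int) -> float:
    """Minutes of sustained max-rate usage before the daily cap is hit."""
    return per_day / per_minute


def sustainable_rate(per_day: int, minutes: int) -> float:
    """Average requests per minute if the daily cap is spread over `minutes`."""
    return per_day / minutes


burst_minutes = minutes_until_daily_cap(GEMINI_PER_MINUTE, GEMINI_PER_DAY)
steady_rate = sustainable_rate(GEMINI_PER_DAY, WORKDAY_MINUTES)
monthly_ratio = (GEMINI_PER_DAY * DAYS_PER_MONTH) / WARP_PER_MONTH

print(f"Daily cap reached after {burst_minutes:.1f} min at the per-minute limit")
print(f"Steady rate over a workday: {steady_rate:.2f} requests/min")
print(f"Monthly allowance vs Warp's free tier: {monthly_ratio:.0f}x")
```

In other words, the per-minute ceiling only matters for short bursts. The daily cap is the binding limit, working out to roughly two requests a minute across a working day.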

As Stability AI co-founder Emad Mostaque noted, Google is well-positioned to use its advanced infrastructure and scale to “absorb losses” and push the cost of GenAI down, which puts real pressure on the competition.

Unlike Claude Code, Gemini CLI is fully open source, available under an Apache 2.0 license – Google is pitching this as a fully transparent, inspectable agent that developers can trust. It also means that users can modify and extend Gemini CLI with custom features and integrations.

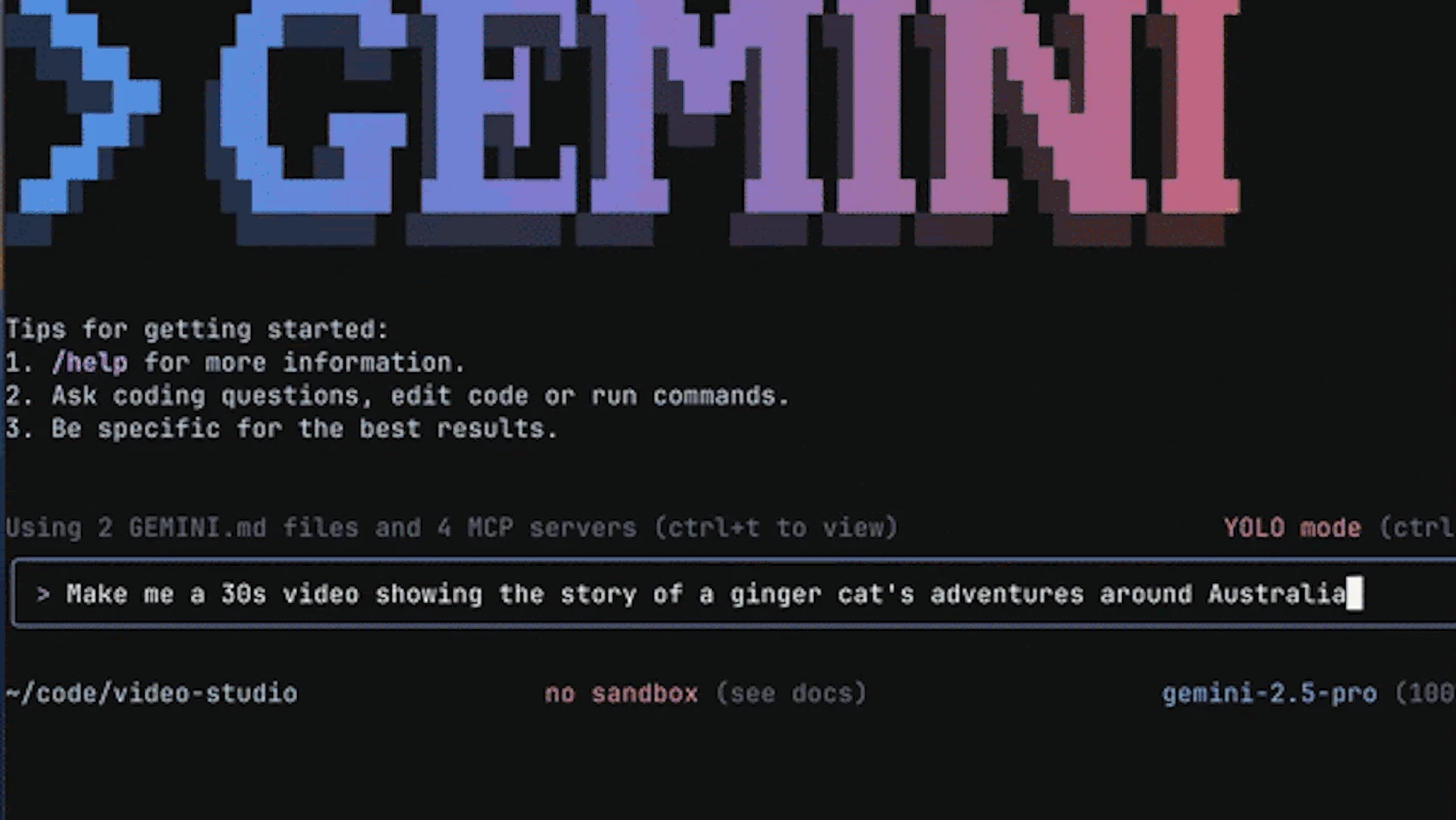

Gemini CLI ships with built-in support for the Model Context Protocol (MCP), the open source framework introduced by Anthropic to standardize how AI systems integrate with external systems and data sources. So, you can ask Gemini to analyze your entire codebase, query databases, and interact with APIs through natural-language prompts.
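As a rough sketch of what wiring up an MCP server might look like, the snippet below builds a settings fragment in Python and prints it as JSON. It assumes Gemini CLI reads MCP servers from an `mcpServers` object in a JSON settings file, with a `command` and `args` per server; check the project’s documentation for the exact schema and file location. The server name `example-db` and its launch command are hypothetical placeholders.

```python
import json


def with_mcp_server(settings: dict, name: str, command: str, args: list[str]) -> dict:
    """Return a copy of `settings` with one MCP server entry added.

    Assumption: servers live under an "mcpServers" key, each with a
    "command" to launch and a list of "args". Verify against the docs.
    """
    updated = dict(settings)
    servers = dict(updated.get("mcpServers", {}))
    servers[name] = {"command": command, "args": list(args)}
    updated["mcpServers"] = servers
    return updated


# Hypothetical server: the name, command and script path are placeholders.
settings = with_mcp_server(
    {},
    name="example-db",
    command="python",
    args=["path/to/your_mcp_server.py"],
)
print(json.dumps(settings, indent=2))
```

The helper returns a new dictionary rather than mutating its input, so any existing entries in a settings file can be loaded, extended, and written back without surprises.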


Gemini CLI also supports system prompts (via GEMINI.md), which can be used to define the agent’s role, behavior, goals, and constraints – this means developers can tailor the assistant to their specific workflows, coding standards, and team conventions.
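To make that concrete, here is a minimal sketch that renders a GEMINI.md from a few fields. The file name comes from the description above; the section headings and example content are illustrative assumptions, not a required schema.

```python
def render_gemini_md(role: str, goals: list[str], constraints: list[str]) -> str:
    """Render a plain Markdown instruction file from a role, goals and constraints."""
    lines = ["# Role", "", role, "", "## Goals", ""]
    lines += [f"- {goal}" for goal in goals]
    lines += ["", "## Constraints", ""]
    lines += [f"- {rule}" for rule in constraints]
    return "\n".join(lines) + "\n"


content = render_gemini_md(
    role="You are a reviewer for our Python services.",
    goals=["Keep changes small and well tested."],
    constraints=["Follow PEP 8.", "Never edit files under migrations/."],
)
print(content)
# To use it, save the text as GEMINI.md, for example:
# pathlib.Path("GEMINI.md").write_text(content)
```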

It’s worth noting that OpenAI’s Codex CLI is also open source, which could be one of the reasons why Google is being aggressive on pricing – it lures users in to build and experiment without worrying about cost.

However, Gemini CLI is setting itself apart in other notable ways. For instance, it supports checkpointing, meaning you can save and restore the state of your AI sessions, which is crucial for managing large or multi-step coding tasks that exceed token or time limits.

Of course, Gemini CLI is tightly integrated with Google’s own models, whereas something like Warp supports models from multiple providers out of the box (including Gemini) under a unified interface – this might be more appealing to those seeking options.

But on the flip side, tight integration with Google’s suite of AI models means that users can access its array of media-generation models spanning music, video, and images.

Want to create a video-story of a cat traveling the world from your terminal? Well, now you can.

Last but not least, unleashing an AI agent into a terminal environment isn’t without its risks, which is why Google has baked a sandboxing feature into Gemini CLI. To enable sandboxing, developers can add the --sandbox (or -s) flag to their terminal command, set the GEMINI_SANDBOX environment variable, or use --yolo mode, which enables sandboxing by default.
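For anyone launching the tool from a script, the first two options might be sketched as follows. The --sandbox flag and the GEMINI_SANDBOX variable are the ones named above; the executable name `gemini` and the variable value `true` are assumptions, and the helper only builds the command rather than running it.

```python
import os


def sandboxed_launch(extra_args=(), use_env_var=False, base_env=None):
    """Return (argv, env) for starting the CLI with sandboxing requested.

    Nothing is executed here; pass the result to subprocess.run() yourself.
    """
    env = dict(os.environ if base_env is None else base_env)
    argv = ["gemini"]  # assumption: name of the installed executable
    if use_env_var:
        env["GEMINI_SANDBOX"] = "true"  # assumption: accepted value
    else:
        argv.append("--sandbox")  # "-s" is the short form
    argv.extend(extra_args)
    return argv, env


argv, env = sandboxed_launch()
print("flag form:", " ".join(argv))

argv_env, env_env = sandboxed_launch(use_env_var=True, base_env={})
print("env form: ", f"GEMINI_SANDBOX={env_env['GEMINI_SANDBOX']}", " ".join(argv_env))
```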