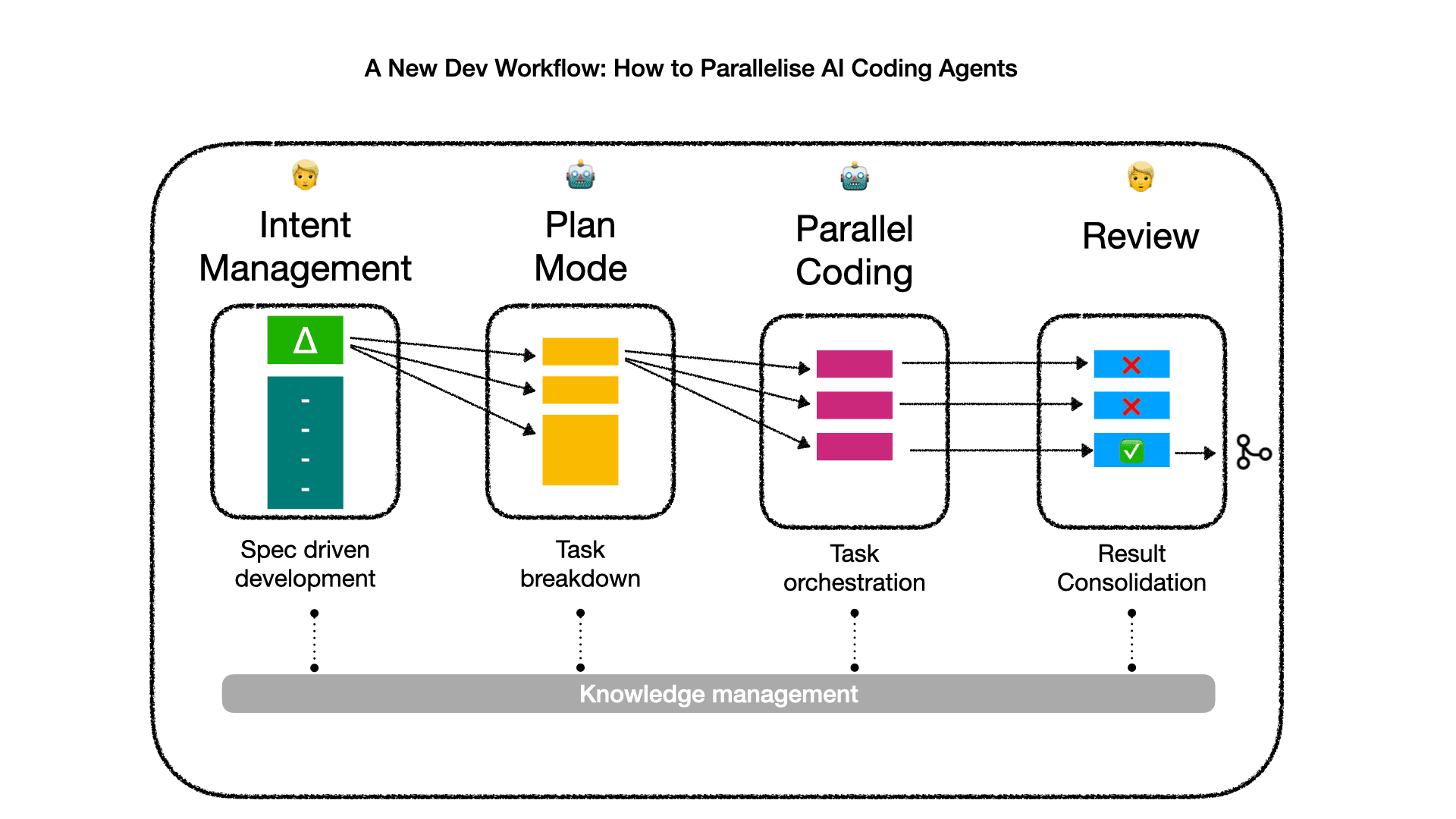

AI coding environments have evolved rapidly: from simple chat-based prompting to retrieval-augmented generation (RAG) and, more recently, to autonomous agents. Each step has improved output quality but has also introduced new workflow patterns. Agent-based coding, for instance, often means longer execution cycles: the agent works autonomously for a while before returning for human feedback.

This shift resembles the transition from a synchronous for-loop to an asynchronous, event-driven architecture. Instead of one AI working step by step, we now have multiple coding agents operating independently in parallel and reporting back, which brings both speed and complexity.
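To make the analogy concrete, here is a minimal Python sketch using `asyncio`. The `run_agent` coroutine is a hypothetical stand-in that only sleeps; a real agent would call a model, edit files, and run tests. What matters is the shape of the loop: the sequential version waits for each agent before starting the next, while the concurrent version starts them all and collects the results once they report back.

```python
import asyncio
import time


async def run_agent(task: str, seconds: float = 0.2) -> str:
    # Hypothetical stand-in for a coding agent: it just sleeps to
    # simulate a long autonomous run, then reports back.
    await asyncio.sleep(seconds)
    return f"done: {task}"


async def sequential(tasks: list[str]) -> list[str]:
    # The "for-loop" model: one agent works through the tasks one by one.
    results = []
    for task in tasks:
        results.append(await run_agent(task))
    return results


async def concurrent(tasks: list[str]) -> list[str]:
    # The event-driven model: start all agents at once and wait until
    # every one has reported back (results keep the input order).
    return list(await asyncio.gather(*(run_agent(t) for t in tasks)))


if __name__ == "__main__":
    tasks = ["login page", "REST API", "unit tests"]
    for mode in (sequential, concurrent):
        start = time.perf_counter()
        results = asyncio.run(mode(tasks))
        print(f"{mode.__name__}: {time.perf_counter() - start:.2f}s {results}")
```

With three tasks of 0.2 seconds each, the sequential run takes roughly 0.6 seconds and the concurrent run roughly 0.2 seconds. `asyncio.gather` collects the results as one batch; `asyncio.as_completed` would instead let you look at each agent's result the moment it arrives.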

Pro tip: this post is the result of a lot of research and is therefore link-heavy. Do explore the links, as they contain many extra gems.

The first reason to parallelize is speed: we want to complete multiple tasks at the same time and then combine the results. This mirrors how human product teams plan their work: different developers build different features side by side and then merge them back in.

But speed isn’t the only reason. Another benefit, perhaps even more important, is the ability to explore divergent ideas in parallel. Human organizations sometimes give the same task to multiple people or teams and have each pursue a different strategy. This fosters innovation and leads to a better understanding of the solution space.

One of the first appearances of this “choice of options” idea was gpt-engineer (now Lovable) generating multiple variations of a UI. We captured this pattern as the third AI native dev pattern, “From delivery to discovery”, where we explain that since delivery is being automated anyway, we can focus on discovering new ideas.

To parallelize, we need to break large specs into atomic tasks that agents can work on independently. This is where reasoning agents act as architects and planners.
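One way to sketch that planning step: represent the planner's output as a dependency graph and group the tasks into “waves” that can run in parallel. This uses Python's standard `graphlib.TopologicalSorter` (Python 3.9+); the task names and dependencies are made up for illustration.

```python
from graphlib import TopologicalSorter

# Hypothetical task breakdown a planner agent might produce for a spec.
# Each key is an atomic task; the set holds the tasks it depends on.
plan = {
    "db schema": set(),
    "auth API": {"db schema"},
    "todo API": {"db schema"},
    "login UI": {"auth API"},
    "todo UI": {"todo API"},
    "e2e tests": {"login UI", "todo UI"},
}


def parallel_batches(graph: dict[str, set[str]]) -> list[list[str]]:
    """Group tasks into waves; every task in a wave can go to its own agent."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # raises CycleError if the plan has circular dependencies
    batches = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted only for stable output
        batches.append(ready)
        sorter.done(*ready)  # in practice: mark done when the agents report back
    return batches


for i, batch in enumerate(parallel_batches(plan), 1):
    print(f"wave {i}: {batch}")
```

Waves are a simplification: a real scheduler would start a task as soon as its own dependencies finish, calling `done()` as each agent reports back rather than once per wave.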

We are seeing task management appear as a core function inside of code generation tools:

Once the build and code tasks are done, our human job begins again. We now have multiple task results that need to be reviewed!

People are trying to figure out how this changes the code review process:

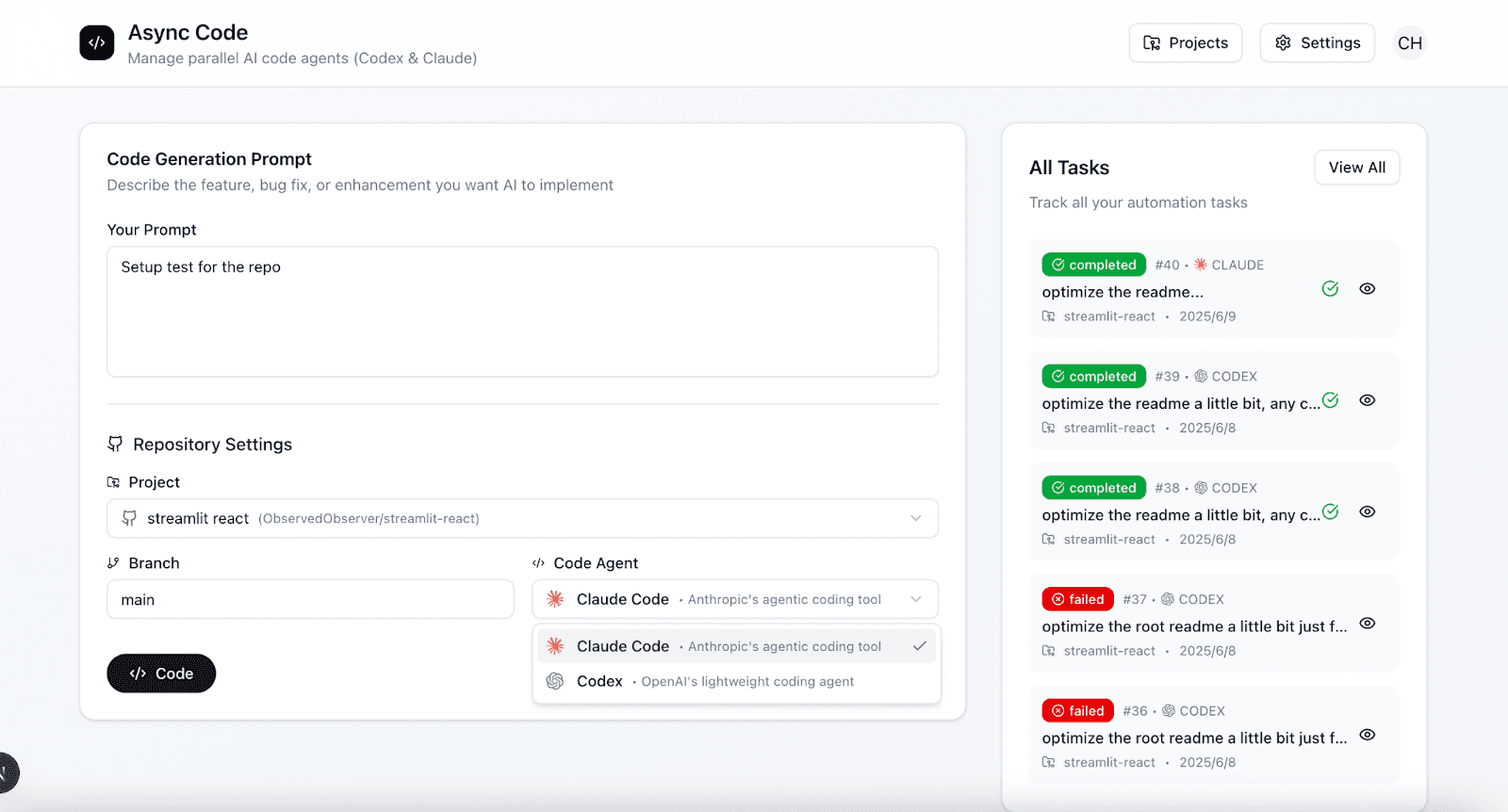

UI example of Async Code Agent

So we end up with a mix of tabs and code diffs, with some tools using color coding to label the different environments.

A few emerging tools attempt to manage this workflow complexity, each with its own new UI workflow: Async Code Agent, Crystal, CCManager, SplitMind, Claude squad, and Claude Code crew.

Another approach is to open the IDE from the terminal loop whenever the agent needs more feedback, as the VS Code plugin for Claude Code does.

This also raises the question: can we automate the review too? I haven’t yet seen a specific, integrated implementation of this, but I can imagine the existing CI/CD review tools helping here as well. From AI engineering, we can certainly borrow the LLM-as-a-judge concept. Alongside the specification for building the app, we would then also need to specify examples and guidelines for reviewing the application and its changes.
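A minimal sketch of what an LLM-as-a-judge review step could look like, assuming the review guidelines live next to the build spec. `GUIDELINES`, the prompt wording, the JSON reply format, and the `fake_llm` stub are all hypothetical; you would swap the stub for a call to whatever model you use. Anything the judge scores low, or flags as violating a guideline, is routed back to a human.

```python
import json
from typing import Callable

# Hypothetical review guidelines: the "review spec" that sits next to the build spec.
GUIDELINES = [
    "Changes stay within the scope of the task description.",
    "New behavior is covered by tests.",
    "No secrets or credentials are added to the repository.",
]


def build_judge_prompt(task: str, diff: str, guidelines: list[str]) -> str:
    rules = "\n".join(f"{i}. {rule}" for i, rule in enumerate(guidelines, 1))
    return (
        "You are reviewing a change produced by a coding agent.\n"
        f"Task: {task}\n\nGuidelines:\n{rules}\n\nDiff:\n{diff}\n\n"
        'Reply with JSON only: {"score": <1-5>, "violations": [<guideline numbers>]}'
    )


def judge(task: str, diff: str, llm: Callable[[str], str], threshold: int = 4) -> dict:
    """Let a model grade a diff; low scores or any violation go to a human."""
    verdict = json.loads(llm(build_judge_prompt(task, diff, GUIDELINES)))
    verdict["needs_human"] = verdict["score"] < threshold or bool(verdict["violations"])
    return verdict


def fake_llm(prompt: str) -> str:
    # Stub standing in for a real model call; no specific LLM API is assumed.
    return json.dumps({"score": 3, "violations": [2]})


print(judge("Add a /health endpoint", "+def health(): return 'ok'", fake_llm))
# {'score': 3, 'violations': [2], 'needs_human': True}
```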

Let’s be honest: parallel agents aren’t without danger. We all know that LLMs and agents can get things wrong and, given the wrong access, can do real damage, such as deleting everything on your computer. Yet if we want to delegate tasks, we are “forced” to trust the agents and accept that they have a lot more access; otherwise we always have to stay in the loop to approve each of their steps. That’s why you see people enabling dangerous settings and YOLO mode. It also makes clear that we have to reduce the potential blast radius.
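Limiting the blast radius can start small. The sketch below is a hypothetical guard (not part of any specific agent tool) that an agent harness could call before every file write, refusing paths that resolve outside the agent's own workspace, including `..` tricks and absolute paths. It is a software check only; the container-based isolation discussed below is a much stronger boundary.

```python
from pathlib import Path


class WorkspaceGuard:
    """Hypothetical guard: an agent may only write inside its own workspace."""

    def __init__(self, root: str):
        self.root = Path(root).resolve()

    def check_write(self, target: str) -> Path:
        # Joining an absolute path replaces the root, and resolve() collapses
        # ".." and symlinks, so escapes show up as paths outside the root.
        path = (self.root / target).resolve()
        if not path.is_relative_to(self.root):  # Python 3.9+
            raise PermissionError(f"blocked write outside workspace: {path}")
        return path


guard = WorkspaceGuard("/tmp/agent-1")
print(guard.check_write("src/app.py"))  # allowed
for attempt in ("../agent-2/app.py", "/etc/passwd"):
    try:
        guard.check_write(attempt)
    except PermissionError as err:
        print(err)  # both attempts are blocked
```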

A human developer typically picks up tasks and code in their own environment. For agents, that means we have to give them their own development environment too. Let’s walk through the spectrum of workspace isolation strategies: from chaotic to cloud-native.

| Workspace Strategy | Isolation | Mergeability | UX Overhead |
|---|---|---|---|
| Multi-IDE Windows | Low | Manual | High |
| Git Worktrees | Medium | Easy | Medium |
| DevContainers/Cloud | High | External | Low |

The first way I saw people parallelizing their environment was by spinning up multiple IDEs and pointing them at the same codebase, as shown in echohive’s video “multiple Cursor Composer and Windsurf Cascade agents”. This creates a lot of windows and quickly turns into chaos on screen. You also need a clear strategy for partitioning directories so that the agents don’t all write to the same place.
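The directory-partitioning strategy can be checked mechanically. This hypothetical helper takes an agent-to-directory assignment and reports any pairs whose directories overlap, i.e. where one agent's directory contains another's. Comparing path components rather than string prefixes avoids false matches such as `src/ui` versus `src/uikit`.

```python
from itertools import combinations
from pathlib import PurePosixPath

# Hypothetical assignment of agents to directories in a shared codebase.
assignments = {
    "agent-frontend": "src/ui",
    "agent-backend": "src/api",
    "agent-docs": "docs",
}


def overlapping(assign: dict[str, str]) -> list[tuple[str, str]]:
    """Return agent pairs whose directories overlap (one contains the other)."""
    clashes = []
    for (a, path_a), (b, path_b) in combinations(assign.items(), 2):
        p, q = PurePosixPath(path_a), PurePosixPath(path_b)
        if p == q or p in q.parents or q in p.parents:
            clashes.append((a, b))
    return clashes


print(overlapping(assignments))  # []
print(overlapping({**assignments, "agent-refactor": "src"}))
# [('agent-frontend', 'agent-refactor'), ('agent-backend', 'agent-refactor')]
```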

This is why people started moving towards headless, terminal-based execution of coding agents.

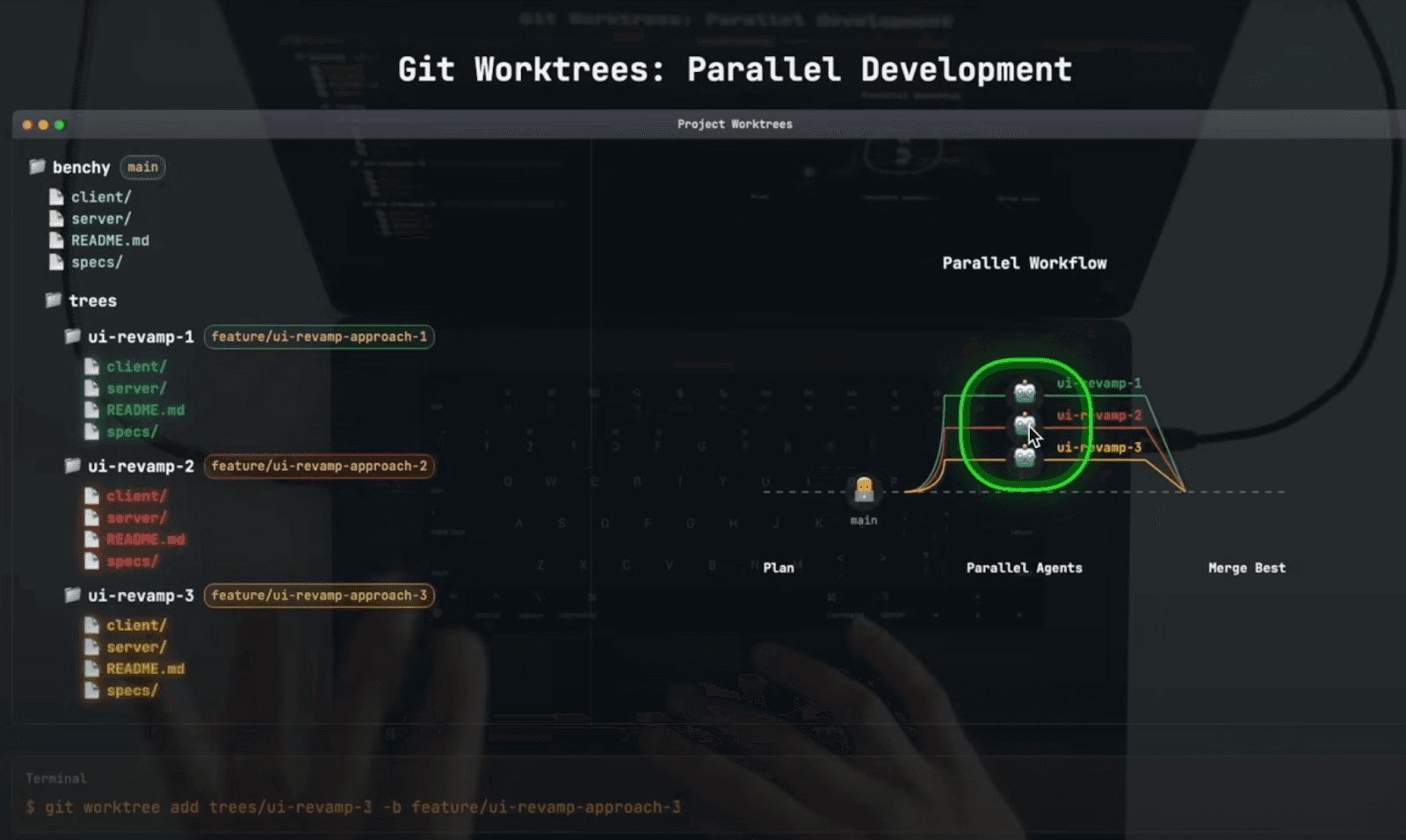

Now, you could copy the files, but what you really want is to copy the repo along with its version control, because that lets you merge the results back in later. Git has a built-in mechanism for this called git worktrees: it lets you check out additional branches of the same repository into separate directories that all share one history. The first time I saw it in action was in IndyDevDan’s video “Claude 4 ADVANCED AI Coding: How I PARALLELIZE Claude Code with Git Worktrees”.
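Here is a sketch of the worktree workflow as Python that builds the actual `git` commands. `git worktree add -b <branch> <path> <base>`, `git merge`, and `git worktree remove` are real git commands; the `agent/<name>` branch naming and the `../wt-<name>` directory layout are assumptions for illustration. By default the script only prints the commands (a dry run).

```python
import subprocess


def worktree_commands(agents: list[str], base: str = "main") -> list[list[str]]:
    """One isolated checkout per agent: directory ../wt-<name> on new branch agent/<name>."""
    return [
        ["git", "worktree", "add", "-b", f"agent/{name}", f"../wt-{name}", base]
        for name in agents
    ]


def merge_commands(agents: list[str]) -> list[list[str]]:
    """After review: merge each agent branch back, then remove its worktree."""
    cmds = []
    for name in agents:
        cmds.append(["git", "merge", "--no-ff", f"agent/{name}"])
        cmds.append(["git", "worktree", "remove", f"../wt-{name}"])
    return cmds


def run(cmds: list[list[str]], dry_run: bool = True) -> None:
    # Run from the main checkout; check=True stops at the first failure
    # (for example, a merge conflict that needs a human).
    for cmd in cmds:
        print(" ".join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    agents = ["auth", "search"]
    run(worktree_commands(agents))  # dry run: only prints the commands
```

Each agent then works inside its own `../wt-<name>` directory on its own branch, and once you have reviewed the results, `merge_commands` brings the branches back into the main checkout.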

IndyDevDan on YouTube: Claude 4 ADVANCED AI Coding: How I PARALLELIZE Claude Code with Git Worktrees

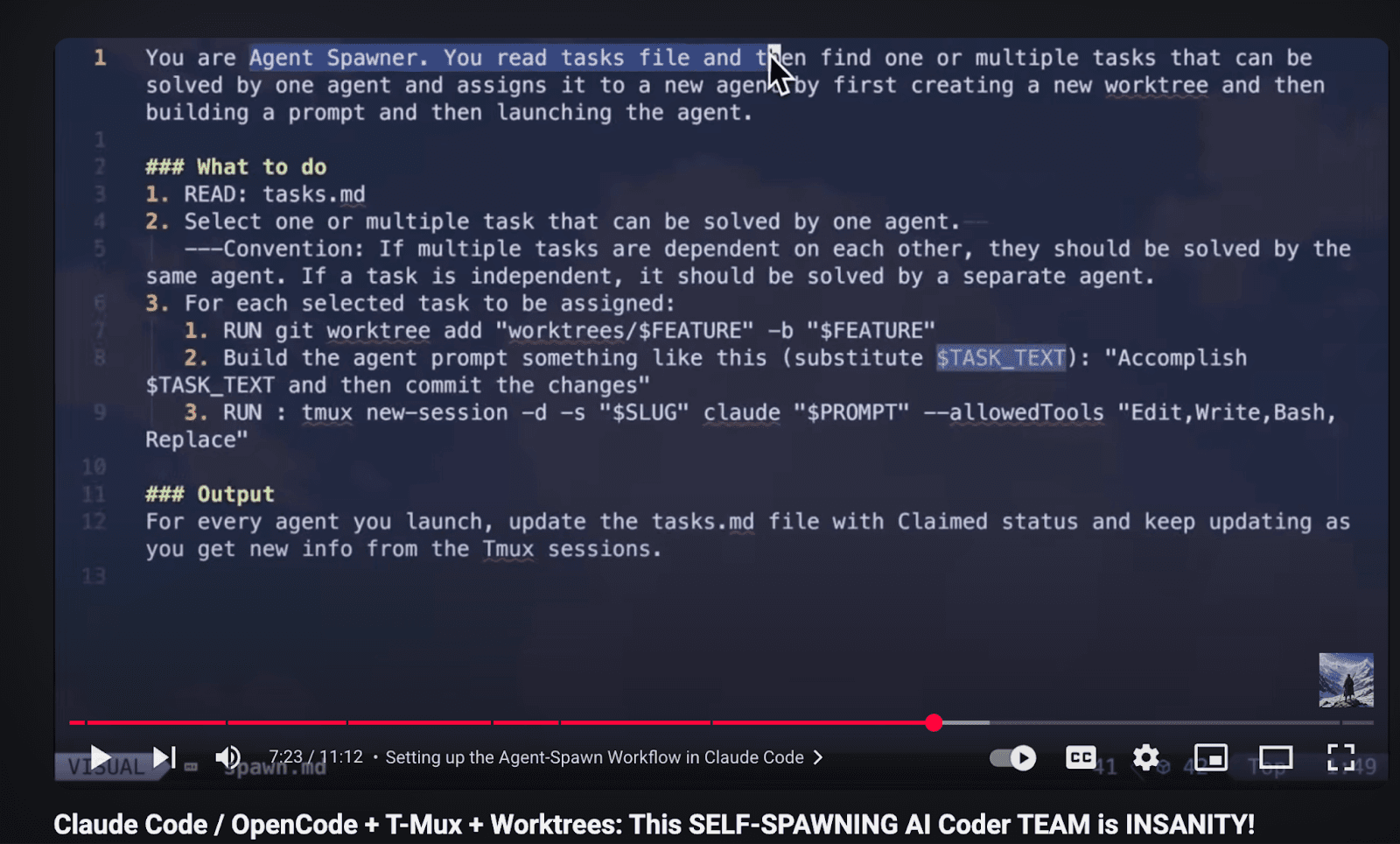

This mechanism is also described on the Claude Code best practices page (scroll down). In addition, Claude Code can spawn subagents, and people got this parallel loop working just by describing the parallel process in markdown files.

Several people have documented their experiences with this novel workflow, and their write-ups are well worth a read:

We also saw many tools emerge around this new workflow (note: many are experimental), such as Uzi, AI-fleet, Claude flow, and Claude simone.

In CI/CD systems, we typically use containers to isolate builds, and devcontainers offer the same kind of isolation on a local machine. We can apply this to agents too: we give each agent its own machine, keeping our local machine clean. This also lets us scale machine power via the cloud and support more complex tech stacks.
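As a sketch of per-agent isolation, here is a helper that builds a `docker run` command giving one agent its own container. The flags (`--rm`, `--detach`, `--cpus`, `--memory`, `--volume`, `--workdir`, `--network none`) are standard Docker CLI options; the image, the resource limits, and mounting a per-agent worktree are assumptions. Mounting only the agent's worktree keeps the rest of the host filesystem out of reach.

```python
from pathlib import Path


def agent_container_cmd(name: str, worktree: str, image: str = "python:3.12-slim",
                        allow_network: bool = False) -> list[str]:
    """Build a `docker run` command that gives one agent its own container.

    Only the agent's worktree is mounted, at /workspace, so commands the agent
    runs cannot touch the rest of the host filesystem.
    """
    cmd = [
        "docker", "run", "--rm", "--detach",
        "--name", f"agent-{name}",
        "--cpus", "2", "--memory", "4g",  # assumed per-agent resource caps
        "--volume", f"{Path(worktree).resolve()}:/workspace",
        "--workdir", "/workspace",
    ]
    if not allow_network:
        cmd += ["--network", "none"]  # no network unless explicitly granted
    # Keep the container alive; the agent process is started inside it later.
    return cmd + [image, "sleep", "infinity"]


print(" ".join(agent_container_cmd("auth", "../wt-auth")))
```

Disabling the network shrinks the blast radius further, but it also blocks package installs and pushes, so in practice you would open it selectively. An agent process can then be started inside the running container with `docker exec`.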

We see this happening in Cursor’s background agents (see “First look at Cursor background agents”): multiple agents run in the cloud, each spawning a container that pulls from the GitHub repository and then reports back. The Claude Code documentation also recommends running your agents in a container or devcontainer (see this Claude config for it).

It should therefore come as no surprise that one of Docker’s founders is working on an optimized workflow for spinning up containers and merging remote agent work back: container-use. You provide container-use to the agent as an MCP server, and the agent can then spawn multiple containers. Frameworks such as Claude parallel work now use it as well.

For example, you can give the agent a prompt like this:

> Create 2 variations of a simple hello world app, one using Flask and one using FastAPI, each in its own environment. Give me the URL of each app.

This would spin up two containers, have them pull the existing repo, start coding and then start the preview version of the app.

Parallel execution isn’t enough. We also need shared memory so agents don’t reinvent the wheel every time: when the system learns things, we want it to share the common knowledge. This can improve future runs of the agents.

A first crude form of this was agents updating a shared markdown file as “shared” memory. We are starting to see the first signs of this being externalized to a shared memory service. This knowledge is another artifact that the agents produce and that we need to approve and review.
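A minimal sketch of that crude first form, assuming a markdown file named `MEMORY.md` (a hypothetical name) as the shared store. New learnings are appended as unchecked task-list items, so they stay pending until a human ticks them; only approved items are read back for future runs.

```python
import tempfile
import threading
from datetime import date
from pathlib import Path


class SharedMemory:
    """Hypothetical shared memory: agents append learnings, humans approve them."""

    def __init__(self, path: str):
        self.path = Path(path)
        self._lock = threading.Lock()  # serializes writers within this process

    def add(self, agent: str, learning: str) -> None:
        # New knowledge lands as an unchecked task-list item: pending review.
        entry = f"- [ ] {learning} (from {agent}, {date.today().isoformat()})\n"
        with self._lock:
            existing = self.path.read_text() if self.path.exists() else ""
            if learning not in existing:  # crude de-duplication
                with self.path.open("a") as f:
                    f.write(entry)

    def approved(self) -> list[str]:
        # Only items a human has ticked ("- [x] ...") are fed to future runs.
        if not self.path.exists():
            return []
        prefix = "- [x] "
        lines = self.path.read_text().splitlines()
        return [line[len(prefix):] for line in lines if line.startswith(prefix)]


mem = SharedMemory(str(Path(tempfile.mkdtemp()) / "MEMORY.md"))
mem.add("agent-1", "Run the tests with `pytest -q`")
mem.add("agent-2", "Run the tests with `pytest -q`")  # duplicate, ignored
print(mem.path.read_text())
print(mem.approved())  # [] until a human ticks the box
```

The `threading.Lock` only serializes writers inside one process. Agents running as separate processes or containers would need file locking or, better, the kind of external shared memory service mentioned above.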

We need to make sure that this knowledge stays up to date and free of conflicts, much like specifications and requirements. Diversity of knowledge can also be fostered by using different models for different tasks: each model then goes through its own parallel thought process, in addition to the parallel threads of coding.
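Keeping knowledge free of conflicts can be partly automated. In this sketch, each learning is a hypothetical `(topic, claim, source)` triple, and topics where agents or models disagree are surfaced for human review. Agreement across different models, as for the test command here, could in turn be treated as a weak signal of reliability.

```python
from collections import defaultdict

# Hypothetical structured learnings: (topic, claim, source agent and model).
learnings = [
    ("test command", "pytest -q", "agent-1 (model A)"),
    ("test command", "pytest -q", "agent-2 (model B)"),
    ("python version", "3.12", "agent-1 (model A)"),
    ("python version", "3.11", "agent-3 (model C)"),
]


def find_conflicts(items: list[tuple[str, str, str]]) -> dict[str, dict[str, list[str]]]:
    """Group claims per topic; topics with more than one distinct claim need a human."""
    claims = defaultdict(lambda: defaultdict(list))
    for topic, claim, source in items:
        claims[topic][claim].append(source)
    return {topic: dict(c) for topic, c in claims.items() if len(c) > 1}


print(find_conflicts(learnings))
# {'python version': {'3.12': ['agent-1 (model A)'], '3.11': ['agent-3 (model C)']}}
```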

What if we could put the different variants in front of customers for feedback?

In CI/CD, testing and review happen on the build systems. Now these build systems can become part of our agentic coding loop. We still have to work out how multiple developers, each with their own set of agents, will collaborate and merge their work, but we can already shift part of the process left.

It feels quite similar to parallel feature branches or, for end users, feature flags. It’s still early days: we are taking our first steps from “auto-committing” to “auto-deploying”, and parallel execution will actually help with the reproducibility and sharing of results.
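On the feature-flag side, putting parallel variants in front of users needs a stable way to decide who sees what. A common technique is deterministic hash bucketing, sketched here with `hashlib`; the flag name and the two variant names (echoing the Flask and FastAPI example) are hypothetical.

```python
import hashlib

# Hypothetical: two agent-built variants of the same feature, deployed side by side.
VARIANTS = ("flask-variant", "fastapi-variant")


def assign_variant(user_id: str, flag: str = "hello-world-variants",
                   variants: tuple[str, ...] = VARIANTS) -> str:
    """Deterministically bucket a user so they always see the same variant."""
    digest = hashlib.sha256(f"{flag}:{user_id}".encode()).hexdigest()
    return variants[int(digest, 16) % len(variants)]


for user in ("alice", "bob", "carol"):
    print(user, "->", assign_variant(user))
```

Hashing the flag name together with the user ID keeps assignments stable across requests while letting different flags split users independently.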

These parallel execution frameworks may lay the groundwork for Continuous-AI, where iteration, learning, and deployment form one seamless loop. So this isn’t just Continuous Integration anymore; it’s Continuous Imagination.