We, as an industry, are trying to work out where things land after the tectonic shifts of AI, and how to thrive as an AI Native Developer.

From one side we hear fearful messages that the robots will do all of the development. Then we remember that writing code != software development, and we think about how much higher-quality software we and our teams can create. We think about how team size may change, hopefully in a way that enables agility. But it's so hard to predict.

Richard Sutton, famed for his work in the field of Reinforcement Learning, wrote The Bitter Lesson, arguing that scalable computation and general-purpose algorithms often surpass methods built on extensive domain-specific insights. It's the type of article that you bookmark and actually come back to. Richard works at Google DeepMind, where this was learned first hand in the evolution of AlphaZero, where the "Zero" signifies how the computer would start from scratch, without human knowledge. Astonishingly, by playing against itself millions of times in games like Go and chess, AlphaZero could actually learn novel strategies and beat the best humans.

Richard is back with another tour de force, joined by David Silver (VP of RL at Google DeepMind): The Era of Experience. Here the pair expand on the bitter lesson, observing that AI’s recent successes (like large language models) came from training on vast human-generated data. They then go further, arguing that to continue progressing, AI agents must learn from their own experience by interacting with environments.

How can we use this type of scale as developers?

How many hours do you have in a day to do productive work? After sleeping, spending time with friends and family, eating, having fun, being stuck in meetings, doing performance reviews... it all gets chipped away, doesn't it? We need to get the most out of the time we do have, and we need to put computers' scale to work while we are doing these other things.

I often follow a pattern such as this:

It's vital to have tools that can scale with the size of potential context. That means balancing two things: models that can take in larger amounts of context without losing the thread, and context systems that can grab exactly the right information for those models. I'm biased here, having worked there, but I'm a big fan of Augment Code for this reason. Marrying an amazing context engine with the top models delivers great results!

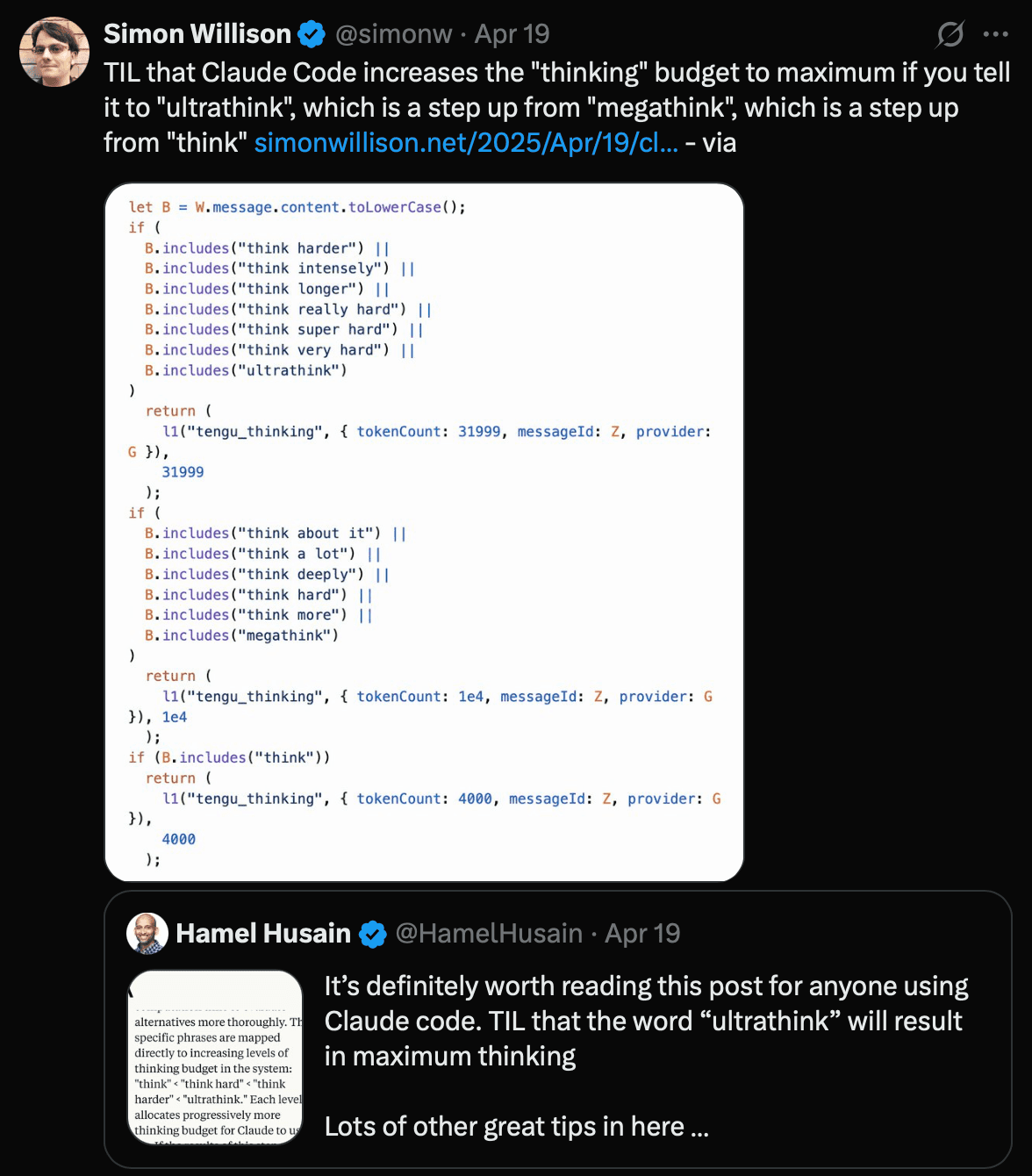

It turns out that Claude Code changes the thinking budget based on certain words… including megathink and ultrathink!
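To make the idea concrete, here is a minimal sketch of how a tool might map trigger words in a prompt to a thinking budget. This is not Claude Code's implementation: the `thinking_budget` function, the table, and the token counts are all hypothetical and only illustrate the pattern.

```python
import re

# Hypothetical keyword-to-budget table. The token counts are illustrative
# assumptions, not values taken from Claude Code.
THINKING_BUDGETS = {
    "ultrathink": 32_000,
    "megathink": 10_000,
    "think": 4_000,
}
DEFAULT_BUDGET = 0  # no trigger word: no extra thinking


def thinking_budget(prompt: str) -> int:
    """Return the largest budget whose trigger word appears in the prompt."""
    # Match whole words only, so "rethinking" does not trigger "think".
    words = set(re.findall(r"[a-z]+", prompt.lower()))
    matched = [budget for word, budget in THINKING_BUDGETS.items() if word in words]
    return max(matched, default=DEFAULT_BUDGET)
```

When several trigger words appear, the sketch simply takes the largest budget, which seems like the least surprising choice for the person typing the prompt.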

For some time we were ramping up the scaling of training data and compute, but then we saw a shift to test-time scaling: allowing the models to "think" more.

It can be daunting to juggle context length, model size, and reasoning time, but systems are starting to manage that for you. Think of it as a compute budget: they’ll allocate just enough horsepower for the task at hand, rather than throwing a large model at something a smaller one could handle more efficiently (case in point: Gemini 2.5 Flash).
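As a rough sketch of that compute-budget idea, the snippet below picks a model tier and a thinking allowance from two inputs. Everything here is an assumption made for illustration: the tier names, the `difficulty` score, and the thresholds are invented, and a real system would decide this in far more sophisticated ways.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Plan:
    model: str            # hypothetical tier name, not a real model ID
    thinking_tokens: int  # how much "thinking" the task is allowed


def plan_compute(context_tokens: int, difficulty: float) -> Plan:
    """Pick the cheapest plan that plausibly fits the task.

    `difficulty` is a made-up score in [0, 1]; the thresholds are illustrative.
    """
    if not 0.0 <= difficulty <= 1.0:
        raise ValueError("difficulty must be between 0 and 1")
    # Easy task with little context: a small model and no extra thinking.
    if difficulty < 0.3 and context_tokens < 8_000:
        return Plan("small-fast", 0)
    # Moderate task, or an easy one with a lot of context.
    if difficulty < 0.7:
        return Plan("medium", 2_000)
    # Hard task: spend the budget.
    return Plan("large", 16_000)
```

For example, `plan_compute(1_000, 0.1)` stays on the small tier, while the same easy task with 50,000 tokens of context is bumped up to the medium tier.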

We need to think differently, and embrace the abundance we all now have as AI Native Developers.