8 Aug 2025 · 6 minute read

Stack Overflow's 2025 Developer Survey, featuring over 49,000 developers from 177 countries, reveals an intriguing paradox in the AI revolution: while 84% of developers now use or plan to use AI tools (up from 76% in 2024), trust in these tools has significantly dropped.

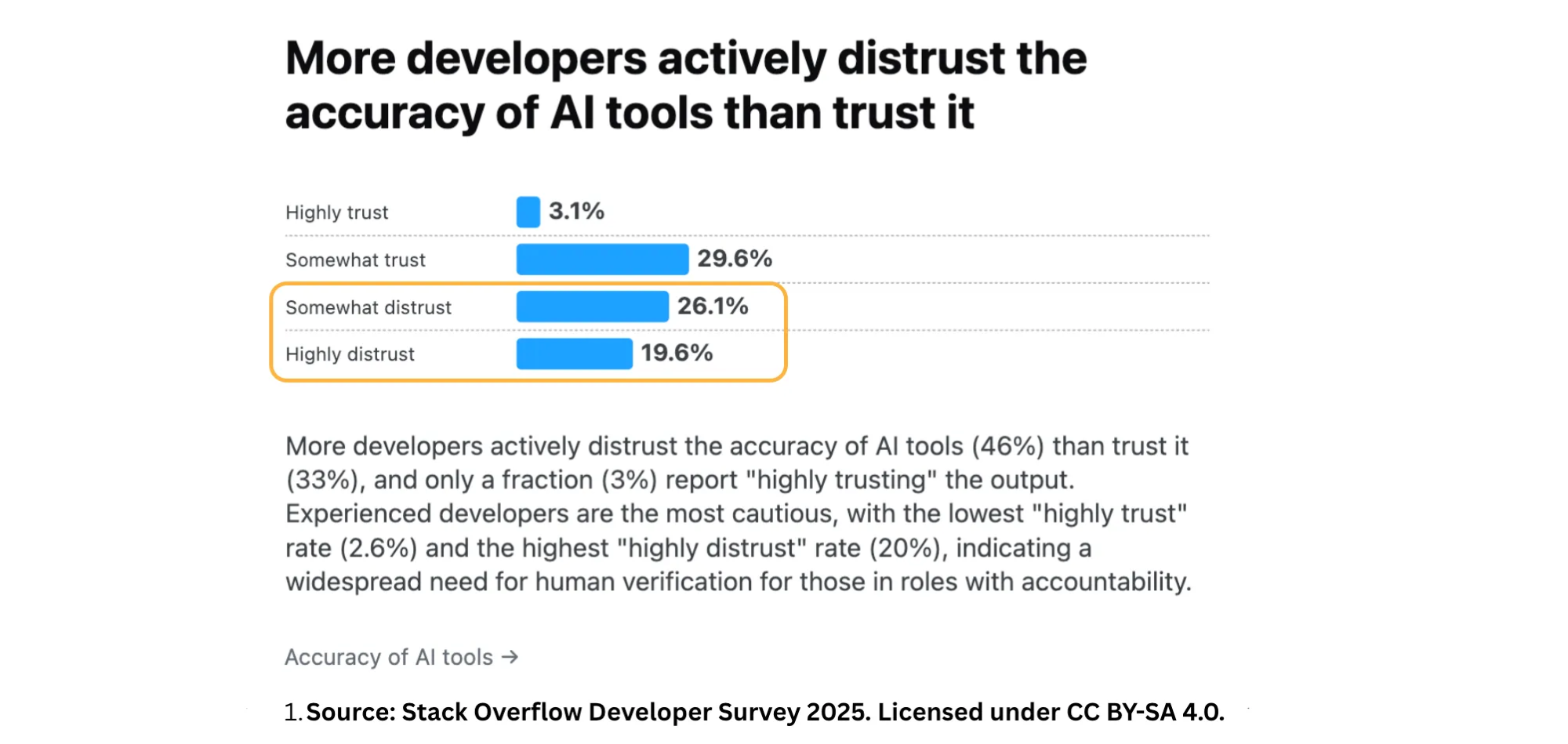

Trust in AI accuracy has fallen sharply: only 33% of developers now trust AI-generated output, down from 43% in 2024. That said, there’s a quote worth remembering: “Today’s AI is the worst you are going to get.” So while trust may be declining, the pace of progress means those losing faith might want to revisit their assumptions sooner rather than later.

Perhaps most telling, 66% of developers report frustration with AI solutions that are "almost right but not quite," often spending more time debugging AI-generated code than writing it themselves. Stack Overflow CEO Prashanth Chandrasekar emphasized that the "growing lack of trust in AI tools stood out" this year, highlighting the need for a trusted "human intelligence layer" to counterbalance inaccuracies.

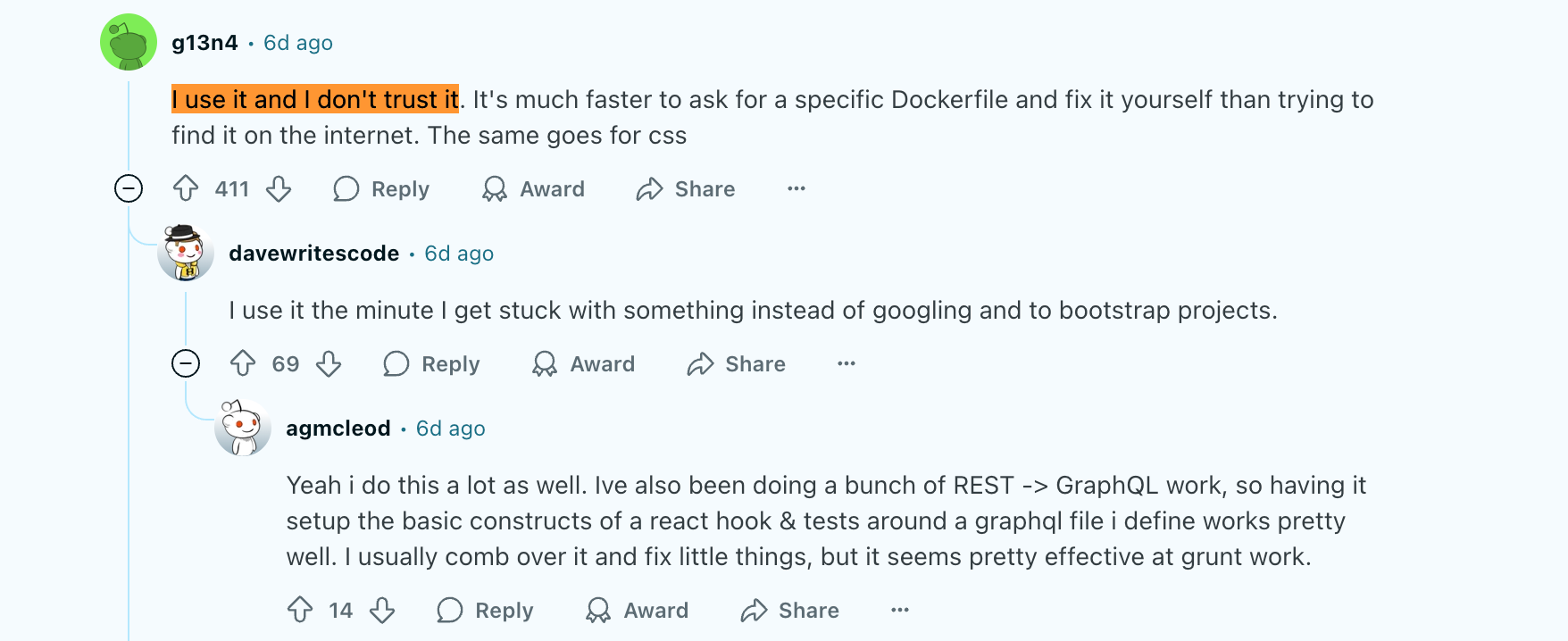

The developer community largely agrees with the survey results. Many note that the findings match their own experience, and some point out that the data is broadly consistent with the trends in last year’s report. Across forums and social media, programmers also echoed that AI tools are useful, even indispensable, but that it would be unwise to trust them blindly.

A more nuanced view is that trust in AI code gen is context-dependent. "Do I trust AI for a full agentic build? Absolutely not. Do I trust it to scaffold a frontend validation schema? Generally, yes - but I will verify."

"LLMs get a bad rep because of individuals who completely outsource thinking."

— HN

The community also acknowledges that although the survey reports that 69% of AI agent users agree AI agents have increased productivity, the rise of AI-first development has at times slowed productivity down. Critically, productivity concerns transcend experience: multiple studies, including recent METR research, suggest even experienced developers are 19% slower when relying on AI tools.

Ultimately, it all depends on the tools being used, the programming languages, and the complexity of the codebase, none of which the survey numbers take into account. Together, these insights point to a core truth: the value of AI in development hinges not just on capability, but on how precisely and intentionally it’s integrated into the workflow.

The issue isn't that people don't trust AI - they do. What they don't trust are the current methods AI uses to generate code, particularly "vibe coding" approaches. As we attempt more complex development tasks, we're consistently hitting the limits of these methods.

This creates a predictable cycle: AI improves, we find new limits. Tools evolve, we discover fresh constraints. The critical question isn't whether AI can help with development - it's whether we can push AI-assisted development into professional, production-ready environments.

The current trust setback should thus accelerate more sophisticated integration patterns, where AI code generation's strengths and limitations are explicitly recognized as core design considerations rather than implementation afterthoughts. For instance, instead of letting AI generate an entire backend service ad hoc, teams might begin designing modular interfaces where AI is only responsible for generating testable utility functions within a predefined contract.
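The idea of a predefined contract can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the slug-generation task, the names `CONTRACT_CASES`, `satisfies_contract`, `generated_slugify` and `almost_right_slugify`, and the sample cases are all hypothetical and do not come from the survey or any specific tool. The point is that the team owns the contract, and a generated function is accepted only if it passes.

```python
import re
from typing import Callable, List, Tuple

# Hypothetical contract owned by the team: a slug function maps a title
# to a lowercase, hyphen-separated string with no leading/trailing hyphens.
SlugFn = Callable[[str], str]

CONTRACT_CASES: List[Tuple[str, str]] = [
    ("Hello World", "hello-world"),
    ("  AI & Trust  ", "ai-trust"),
    ("", ""),
]


def satisfies_contract(candidate: SlugFn) -> bool:
    """Return True only if the candidate passes every contract case."""
    for raw, expected in CONTRACT_CASES:
        try:
            if candidate(raw) != expected:
                return False
        except Exception:
            # A crash counts as a contract violation, not a test error.
            return False
    return True


# Stand-in for an AI-generated utility that happens to meet the contract.
def generated_slugify(title: str) -> str:
    return re.sub(r"[^a-z0-9]+", "-", title.lower()).strip("-")


# Stand-in for an "almost right" generation: fine on the happy path,
# wrong on punctuation and surrounding whitespace.
def almost_right_slugify(title: str) -> str:
    return title.lower().replace(" ", "-")


if __name__ == "__main__":
    print(satisfies_contract(generated_slugify))
    print(satisfies_contract(almost_right_slugify))
```

Because the contract cases are written before any code is generated, the "almost right" variant is rejected automatically instead of being caught later in debugging.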

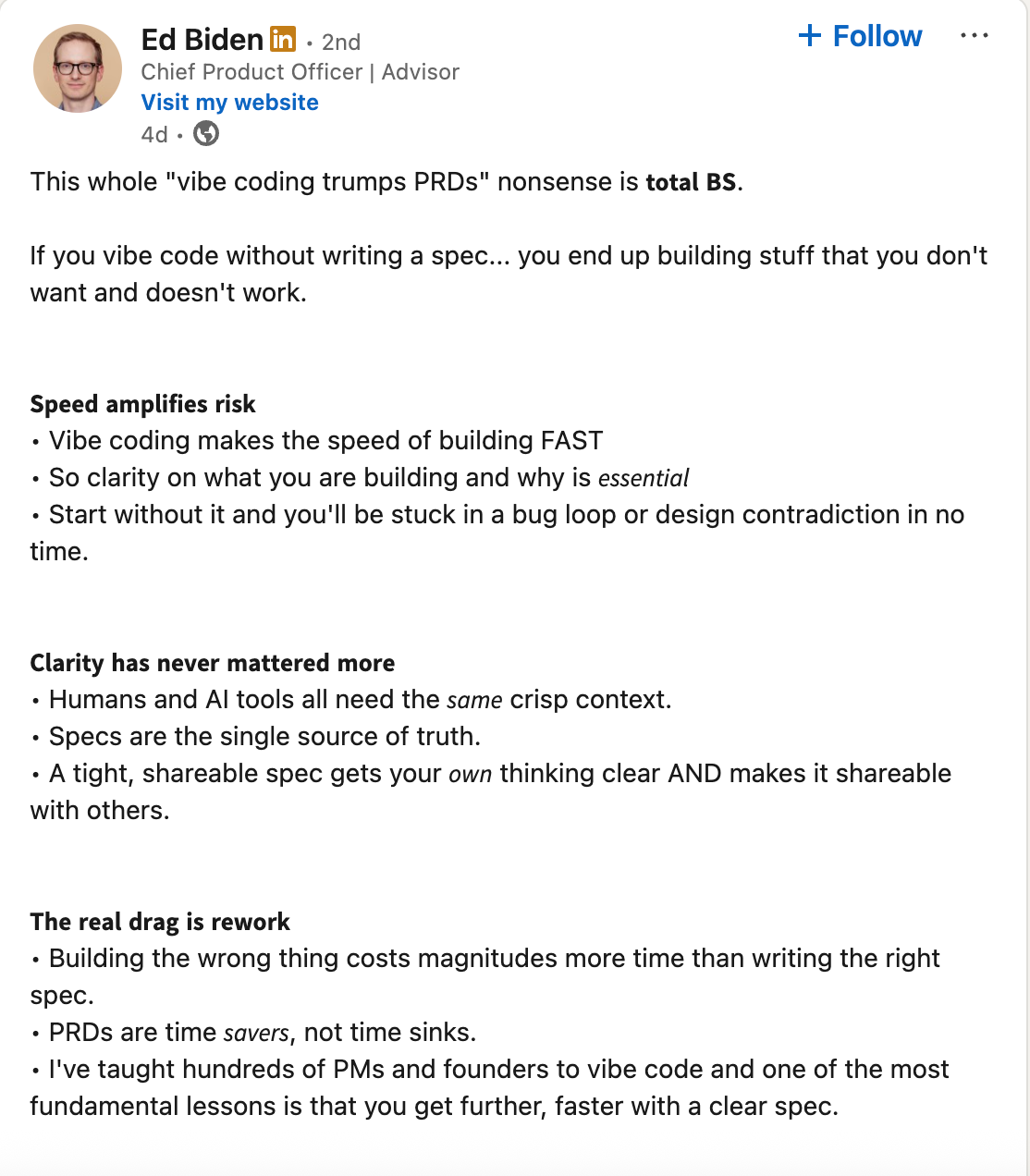

A robust future for AI-native development likely includes clear guardrails and a test-driven approach, as embraced by members of our community who advocate spec-driven development.

Alignment between intent and AI output is emerging as the key challenge, surpassing simple code generation. Specs act as the anchor, offering structured clarity for both AI reasoning and human validation. They provide a shared, testable source of truth crucial in managing AI-generated content's inherent unpredictability. In a world where AI confidently delivers almost right solutions, specs help safeguard against subtle yet significant errors.
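One way to picture a spec as a shared, testable source of truth is as a list of named clauses that any implementation must satisfy. The sketch below is an assumption-laden illustration, not a description of a particular spec-driven tool: the discount example, the `Clause` type, the `SPEC` list, and the `violations` helper are all hypothetical names introduced here.

```python
from dataclasses import dataclass
from typing import Callable, List

# Signature every candidate must have: (price, percent) -> discounted price.
DiscountFn = Callable[[float, float], float]


@dataclass(frozen=True)
class Clause:
    """One human-readable requirement paired with an executable check."""
    name: str
    check: Callable[[DiscountFn], bool]


# Hypothetical spec, written by people before any code is generated.
SPEC: List[Clause] = [
    Clause("zero percent leaves price unchanged", lambda f: f(80.0, 0.0) == 80.0),
    Clause("full discount gives zero", lambda f: f(80.0, 100.0) == 0.0),
    Clause("result is never negative", lambda f: f(80.0, 150.0) >= 0.0),
]


def violations(candidate: DiscountFn) -> List[str]:
    """Return the names of the spec clauses the candidate fails."""
    failed = []
    for clause in SPEC:
        try:
            ok = clause.check(candidate)
        except Exception:
            ok = False  # an exception is treated as a failed clause
        if not ok:
            failed.append(clause.name)
    return failed


# Stand-in for a confident but "almost right" generation: no input clamping.
def almost_right(price: float, percent: float) -> float:
    return price * (1 - percent / 100)


# Stand-in for a generation that respects the spec.
def clamped(price: float, percent: float) -> float:
    percent = min(max(percent, 0.0), 100.0)
    return price * (1 - percent / 100)


if __name__ == "__main__":
    print(violations(almost_right))
    print(violations(clamped))
```

Reporting failed clauses by name, rather than a bare pass or fail, gives a human reviewer something concrete to check the generated code against.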

While AI-native development is advancing rapidly, its ultimate form will likely be nuanced. This year's survey offers devs a practical reality check, underscoring the need for thoughtful integration, realistic expectations, and human oversight as AI becomes more central to development practices.