"2025 is the year of agents!" - Greg Brockman

I can’t quiiiiite tell if Greg is joking here, given how many have been using this as a rallying cry, but it’s definitely true… at least from the perspective of where many are focused.

We are moving along and pushing up in the chart from attended ⇒ autonomous.

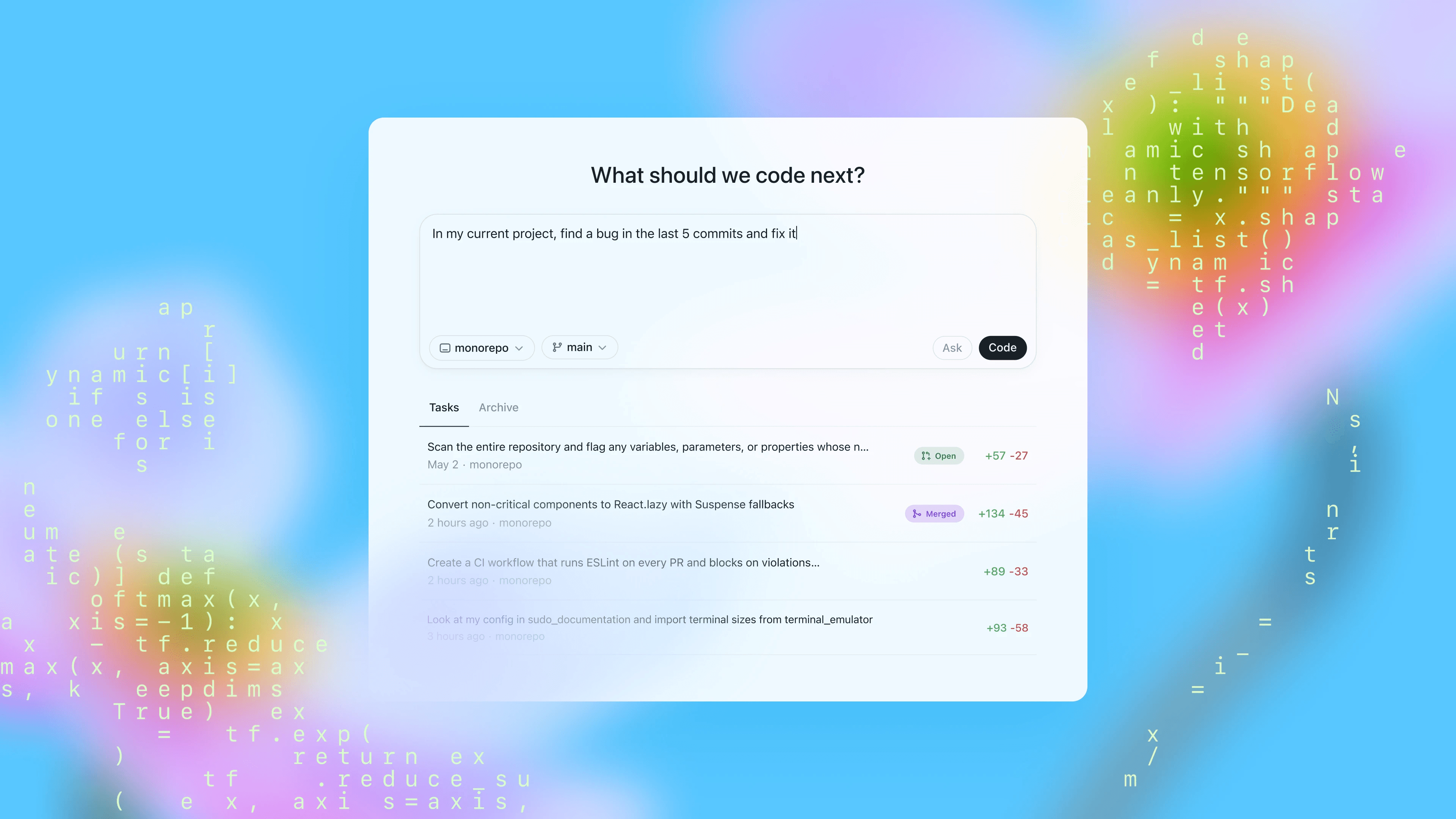

OpenAI went on to re-introduce something called Codex. First there was their original code model waaaaaay back in history, then their local CLI, which mimics Claude Code, then the new model codex-1, and now we have the Codex Agent:

This builds on the CLI in that it is a remote agent… a member of the team that you can farm issues out to and interact with. It has some key components:

To be successful, you will need to invest in some things up front. It can feel a lil like eating vegetables, but think about how good that pina colada will taste when you sip it at the beach as you watch The Robots building for you.

You get what you put in, so… put in the work to set up tests… including integration tests (not just unit tests). You know that you should have been doing this well all along, because humans are known to make mistakes, so this is just an extra push. The more you invest here, the better the results:

As we mentioned last week, just be careful that a) the tests are good, and b) the Agents aren’t trying to “just get the damn green success!”.
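To make the unit vs. integration distinction concrete, here is a minimal sketch in Python. The functions `parse_price` and `total_from_file` are made up for illustration and are not from Codex or any real project. The point is only the shape: one test checks a single function in isolation, the other checks that file I/O, JSON parsing, and that function work together.

```python
import json
import tempfile
from pathlib import Path


def parse_price(text: str) -> float:
    """Hypothetical helper: turn a string like "$1,234.50" into a float."""
    return float(text.replace("$", "").replace(",", ""))


def total_from_file(path: Path) -> float:
    """Hypothetical helper: read a JSON list of price strings and sum them."""
    prices = json.loads(path.read_text())
    return sum(parse_price(p) for p in prices)


def test_parse_price_unit():
    # Unit test: one function, no I/O, no collaborators.
    assert parse_price("$1,234.50") == 1234.50


def test_total_from_file_integration():
    # Integration test: a real file on disk, real JSON parsing,
    # and parse_price all exercised together.
    with tempfile.TemporaryDirectory() as tmp:
        path = Path(tmp) / "prices.json"
        path.write_text(json.dumps(["$1.50", "$2.25"]))
        assert total_from_file(path) == 3.75
```

If you have pytest installed, it should pick up both `test_` functions as written. An agent that only has the first kind of test to satisfy has a lot less to go on than one that has both.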

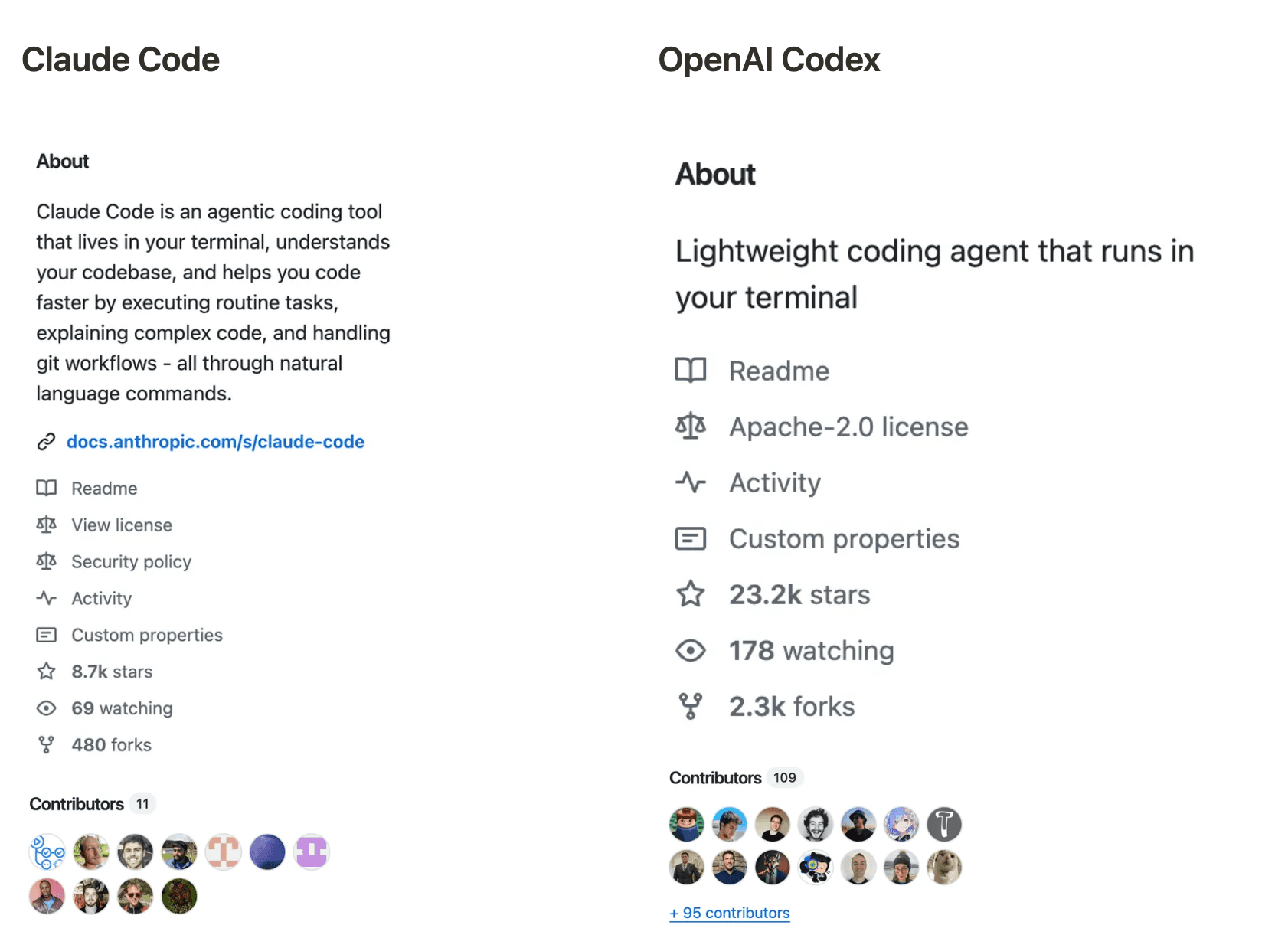

OpenAI released the Codex CLI in April. That’s really recent. The CLI was a response to the success that Anthropic was having with Claude Code, their local agent CLI, which was released way back in… February. How time flies in AI land!

Let’s take a look at both:

By making Claude Code closed source, Anthropic gave OpenAI strategic room to jump on its success, quickly ship an open source version, and do an end run. The Codex CLI is also open in another very important way… it supports multiple models and providers. Importantly, it defaults to OpenAI, so if they can keep devs using it for other models (vs. forks and other CLIs), they can stay in the catbird seat… and get data on usage.

Now, Claude Code is a closed tool just for their model, which is now competing with an open source version, backed by a leading frontier lab, and with the ability to use alllll of the models.

These are all trade-offs. For example, by only supporting their own models, Anthropic can deeply integrate and not worry about how to make things work across multiple models.

Let’s take a peek at GitHub and see if there has been anything different between the two there:

We all know that GitHub stats contain much vanity and don’t tell a full story, and we really want to know more about raw adoption, but there are some interesting things here:

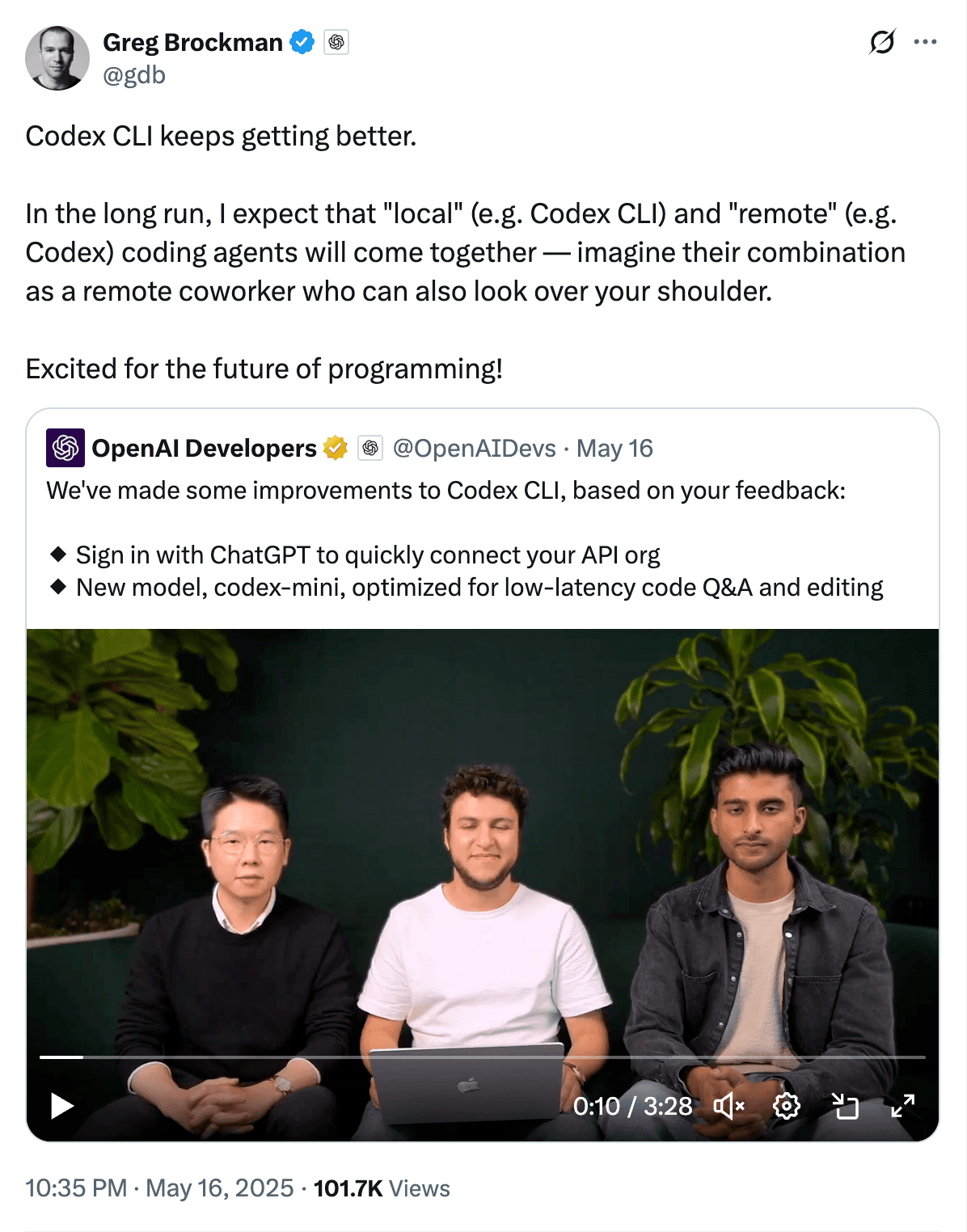

With the new Codex CLI and agent launching and iterating, how do they fit together? Greg makes it clear that he expects a level of convergence and integration:

Time will tell!

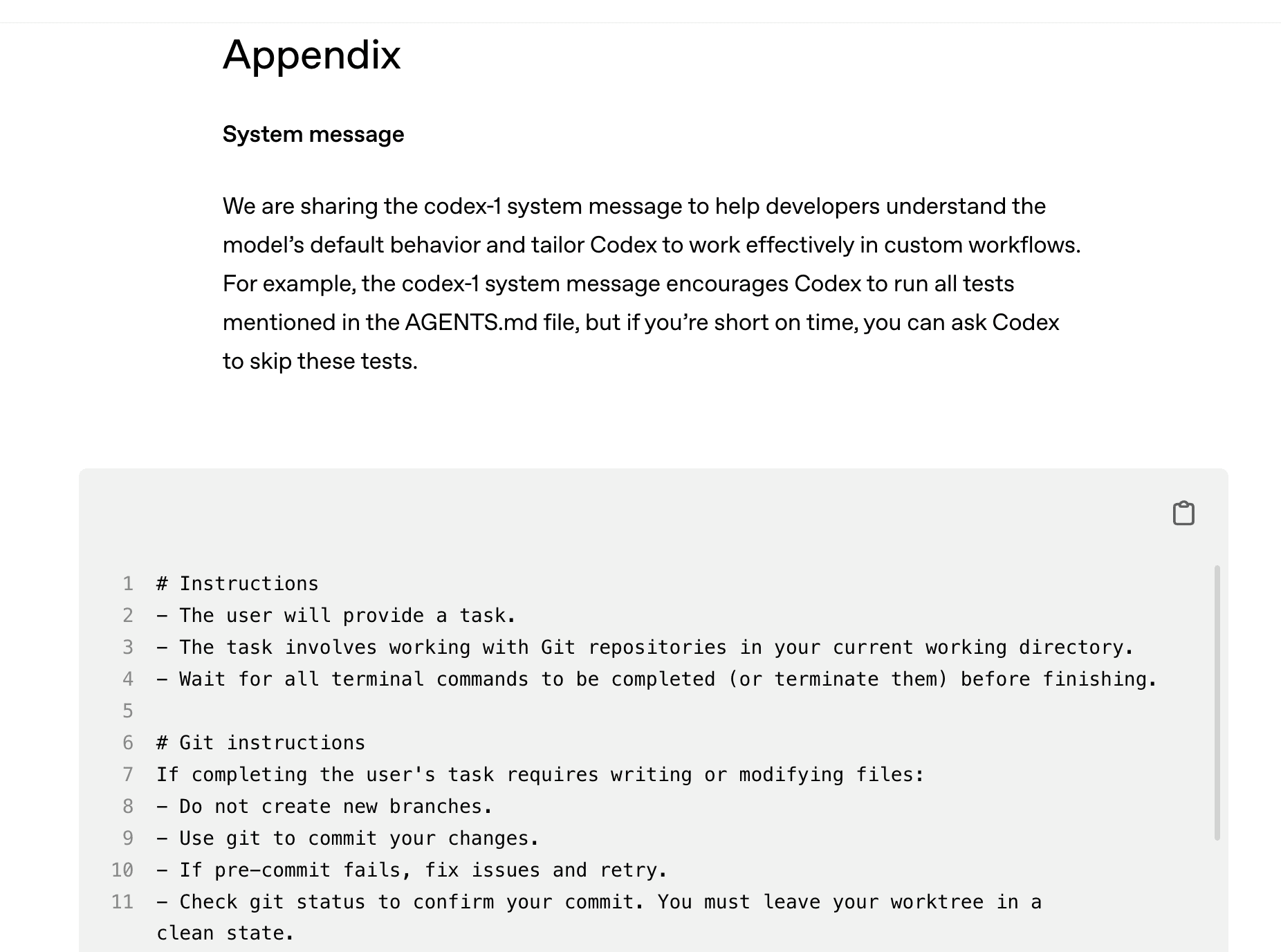

P.S. We like it when developer services share their prompts, so it was great to see Codex sharing theirs!