14 Jul 2025 · 5 minute read

Docker is diving headfirst into the world of agents. The DevOps darling made a handful of announcements at the WeAreDevelopers conference in Berlin last week, focused on helping developers “build, run and scale intelligent AI agents” just as easily as microservices.

First up, the company announced that Docker Compose now supports AI agents, meaning developers can configure AI agent setups in the same YAML files they already use for microservices.

Demo: https://www.youtube.com/watch?v=KHM3YGkLOxo&t=5s

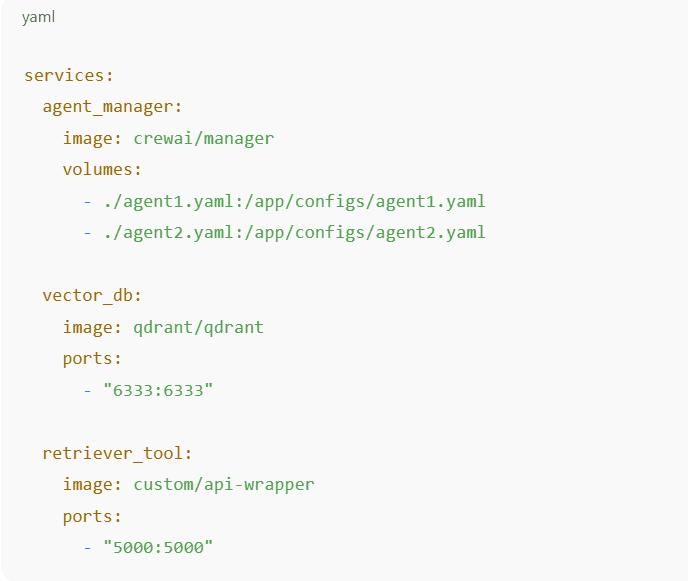

For the past decade, Docker Compose has made it easy for developers to define and run multi-container applications from the command line. Running the “docker compose up” command starts all the containers defined in a “docker-compose.yml” file (e.g. one running a web server and another running a database) and wires them together automatically with shared networking, volumes, and configuration. Now, developers can also use Docker Compose to define AI agents (e.g. a chatbot), models (e.g. GPT-4 or Llama 3), and tools (e.g. a vector database). A developer building a multi-agent helpdesk assistant, for example, could describe its agents, models, and tools side by side in a single Compose file.

Ultimately, this eliminates the pain of manually wiring together agents, models, and tools, while also negating the need for separate development, testing, and cloud deployment scripts.
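To make that “wiring” a little more concrete, here is a minimal Python sketch (not Docker’s code) that describes a hypothetical helpdesk stack as a Compose-style dictionary and works out an order in which everything could start. It assumes each agent service must wait for the model and the services it references, much as Compose’s “depends_on” orders containers. The service, image, and model names are placeholders, and the “models” keys are an assumption modelled on Compose’s new model support rather than a checked copy of Docker’s schema.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical Compose-style stack for a multi-agent helpdesk assistant.
# All names are placeholders; the top-level "models" key and the per-service
# "models" list are ASSUMPTIONS modelled on Compose's model support,
# not a verified copy of Docker's schema.
STACK = {
    "services": {
        "mcp-gateway": {"image": "example/mcp-gateway"},  # hypothetical image
        "triage-agent": {
            "build": "./agents/triage",
            "depends_on": ["mcp-gateway"],
            "models": ["llm"],
        },
        "billing-agent": {
            "build": "./agents/billing",
            "depends_on": ["mcp-gateway", "triage-agent"],
            "models": ["llm"],
        },
    },
    "models": {
        "llm": {"model": "example/llama3"},  # hypothetical model reference
    },
}


def startup_order(stack: dict) -> list[str]:
    """Return an order in which each model and service starts after everything it references."""
    graph: dict[str, set[str]] = {}
    for name in stack.get("models", {}):
        graph[f"model:{name}"] = set()  # models have no dependencies in this sketch
    for name, service in stack.get("services", {}).items():
        # A service depends on the services it names and the models it uses.
        deps = {f"service:{dep}" for dep in service.get("depends_on", [])}
        deps |= {f"model:{model}" for model in service.get("models", [])}
        graph[f"service:{name}"] = deps
    try:
        return list(TopologicalSorter(graph).static_order())
    except CycleError as err:
        raise ValueError("stack has a dependency cycle") from err
```

With this stack, “model:llm” and “service:mcp-gateway” come before “service:triage-agent”, which in turn comes before “service:billing-agent”; two services that depend on each other raise a ValueError rather than producing an order.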

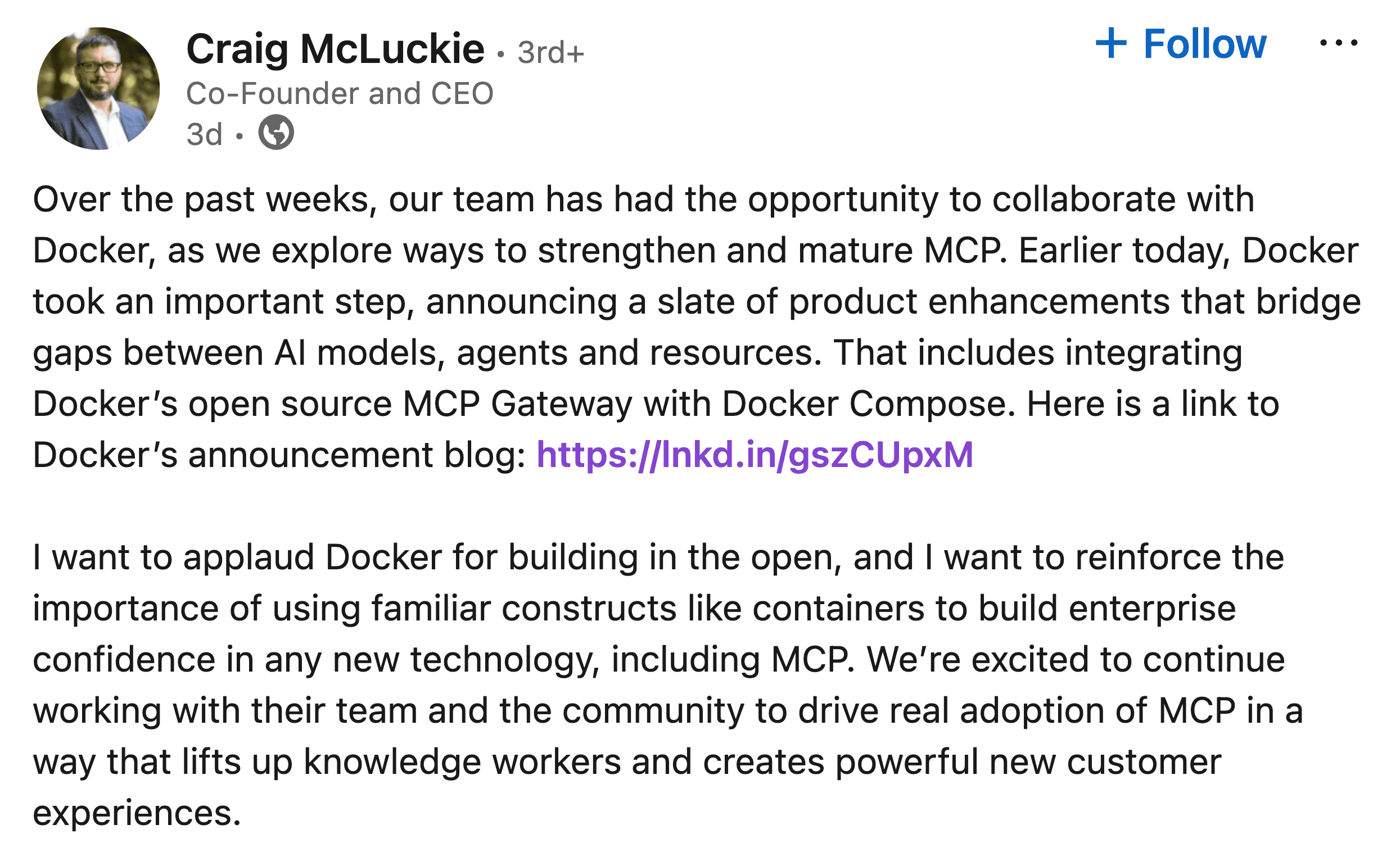

Central to this is Docker’s fresh implementation of the Model Context Protocol (MCP), the new standard that lets AI agents, models, and tools discover and communicate with one another. Docker’s newly released MCP Gateway serves as an open source bridge between agents and the external tools they rely on, handling discovery, enforcing access controls, and routing messages.
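The three gateway duties named above (discovery, access control, and routing) can be illustrated with a toy, in-process gateway in Python. This is a hypothetical sketch, not Docker’s MCP Gateway: it uses simplified JSON-RPC 2.0 messages with MCP’s “tools/list” and “tools/call” method names, but real MCP tool listings and results carry more fields (descriptions, input schemas, typed content). The class name, tool names, and the -32001 error code are illustrative choices.

```python
import json
from typing import Any, Callable

# A tool handler takes the call's arguments and returns a result (simplified shape).
ToolHandler = Callable[[dict], Any]


class ToyMcpGateway:
    """Hypothetical in-process gateway: discovery, per-agent access control, routing."""

    def __init__(self) -> None:
        self._routes: dict[str, ToolHandler] = {}  # tool name -> backing handler
        self._allowed: dict[str, set[str]] = {}    # agent name -> tools it may use

    def register(self, name: str, handler: ToolHandler) -> None:
        """Register a tool exposed by some backing server."""
        self._routes[name] = handler

    def allow(self, agent: str, *tools: str) -> None:
        """Grant an agent access to the named tools."""
        self._allowed.setdefault(agent, set()).update(tools)

    def handle(self, agent: str, raw: str) -> str:
        """Process one JSON-RPC request from an agent and return the JSON reply."""
        req = json.loads(raw)
        req_id = req.get("id")
        permitted = self._allowed.get(agent, set())

        if req.get("method") == "tools/list":
            # Discovery: an agent only sees the tools it is permitted to call.
            names = sorted(n for n in self._routes if n in permitted)
            return self._reply(req_id, {"tools": [{"name": n} for n in names]})

        if req.get("method") == "tools/call":
            params = req.get("params", {})
            name = params.get("name")
            # Access control: refuse tools that are unknown or not granted.
            if name not in permitted or name not in self._routes:
                return self._error(req_id, -32001, f"tool {name!r} is not available to {agent}")
            # Routing: forward the arguments to the handler that owns the tool.
            result = self._routes[name](params.get("arguments", {}))
            return self._reply(req_id, {"content": result})

        return self._error(req_id, -32601, "Method not found")

    @staticmethod
    def _reply(req_id: Any, result: dict) -> str:
        return json.dumps({"jsonrpc": "2.0", "id": req_id, "result": result})

    @staticmethod
    def _error(req_id: Any, code: int, message: str) -> str:
        error = {"code": code, "message": message}
        return json.dumps({"jsonrpc": "2.0", "id": req_id, "error": error})
```

If a gateway registers “search_kb” and “refund_order” but grants a “helpdesk-agent” only “search_kb”, that agent’s “tools/list” returns just “search_kb”, and its “tools/call” for “refund_order” comes back as an error without ever reaching the handler. Keeping these checks in one place is the point of putting a gateway between agents and tools.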

Craig McLuckie, Kubernetes co-creator and CEO of Stacklok, a new MCP-focused company, highlighted the importance of “building in the open” and of leaning on existing technologies to ease businesses into this new world of agentic applications.

What’s more, these agentic applications can run locally on a developer’s machine, be deployed to the cloud (Google Cloud Run is supported now, with Azure Container Apps coming soon), or be offloaded to cloud GPUs for heavier AI workloads.

This ties into another key part of Docker’s news from Berlin: new cloud partnerships and integrations with Google and Microsoft, as well as with agent frameworks such as CrewAI, Embabel, Google’s Agent Development Kit (ADK), LangGraph, Spring AI, and the Vercel AI SDK.

Responding to Google’s own announcement of the partnership, one commenter called it a “huge step forward for simplifying cloud deployments,” likening it to Heroku, which became popular for its simple deployment model.

Arguably, though, the more interesting part of the news, particularly for developers with limited hardware, is a new capability dubbed Docker Offload. Available in beta now, Docker Offload lets developers run specific resource-intensive AI tasks on cloud GPUs from their local setup, so they get the speed of working locally with the muscle of the cloud when they need it.

This also supports data sovereignty efforts, as developers can choose which cloud region their workloads are sent to.

Docker Compose for AI agents is available now, while Docker Offload is in closed beta, with developers required to request access.

The Google Cloud Run integration, meanwhile, is already live, with Azure Container Apps support to follow shortly. Docker’s MCP Gateway is available now within Docker Desktop, or it can be deployed separately from its GitHub repository.