OpenAI last month announced that prompts are now API primitives, meaning developers can create, manage, share, and call them programmatically, just like they do with models.

In real terms, this means that developers can now manage and organize all their prompts in one place within their OpenAI workspace. More than that, they can track prompt changes over time, run A/B tests, compare performance, and roll back updates, just as they do with code.

With this change, prompts evolve from presets stored locally within Playground (OpenAI’s web interface for experimenting with prompts) to centralized, versioned API objects accessible across the broader OpenAI platform. That platform includes Evals, a tool for systematically testing model outputs that makes it easier to run A/B tests and benchmarks on prompts, and Stored Completions, which helps teams review how specific prompts performed in practice.

This has big ramifications for collaboration, as teams can share and update prompts centrally, reducing friction and duplication. It also eases maintenance: because integrations reference a single central prompt, an update propagates to all of them, with no need to update copies of the same prompt in different locations.
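The versioning, rollback, and propagation behavior described above can be pictured with a toy, in-memory registry. This is a hypothetical Python model for illustration only, not OpenAI’s implementation or API: the `PromptRegistry` class, its methods, and the integer version numbers are all assumptions. Callers that ask for the current version pick up an update immediately, while callers that pin a version are insulated from it.

```python
from typing import Optional


class PromptRegistry:
    """Hypothetical in-memory stand-in for a central prompt store (not OpenAI's API)."""

    def __init__(self) -> None:
        # prompt ID -> every published template; version N lives at index N - 1
        self._versions: dict[str, list[str]] = {}
        # prompt ID -> version number served when a caller does not pin one
        self._current: dict[str, int] = {}

    def _validate(self, prompt_id: str, version: int) -> None:
        """Reject version numbers that were never published for this prompt."""
        if not 1 <= version <= len(self._versions.get(prompt_id, [])):
            raise ValueError(f"unknown version {version} for {prompt_id!r}")

    def publish(self, prompt_id: str, template: str) -> int:
        """Append a new version, make it current, and return its number."""
        versions = self._versions.setdefault(prompt_id, [])
        versions.append(template)
        self._current[prompt_id] = len(versions)
        return len(versions)

    def rollback(self, prompt_id: str, version: int) -> None:
        """Make an earlier version current again; the full history is kept."""
        self._validate(prompt_id, version)
        self._current[prompt_id] = version

    def get(self, prompt_id: str, version: Optional[int] = None) -> str:
        """Return the pinned version if one is given, otherwise the current one."""
        number = self._current[prompt_id] if version is None else version
        self._validate(prompt_id, number)
        return self._versions[prompt_id][number - 1]


registry = PromptRegistry()
registry.publish("support_reply", "Hi {{customer_name}}, thanks for reaching out.")
registry.publish("support_reply", "Hello {{customer_name}}! How can we help today?")

print(registry.get("support_reply"))             # unpinned callers see version 2
print(registry.get("support_reply", version=1))  # a pinned caller still gets version 1
registry.rollback("support_reply", 1)            # undo the release without losing history
print(registry.get("support_reply"))             # unpinned callers are back on version 1
```

Keeping every version and only moving the "current" pointer is what makes rollback cheap and safe in this toy model: nothing is overwritten, so any earlier version can be restored or compared later.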

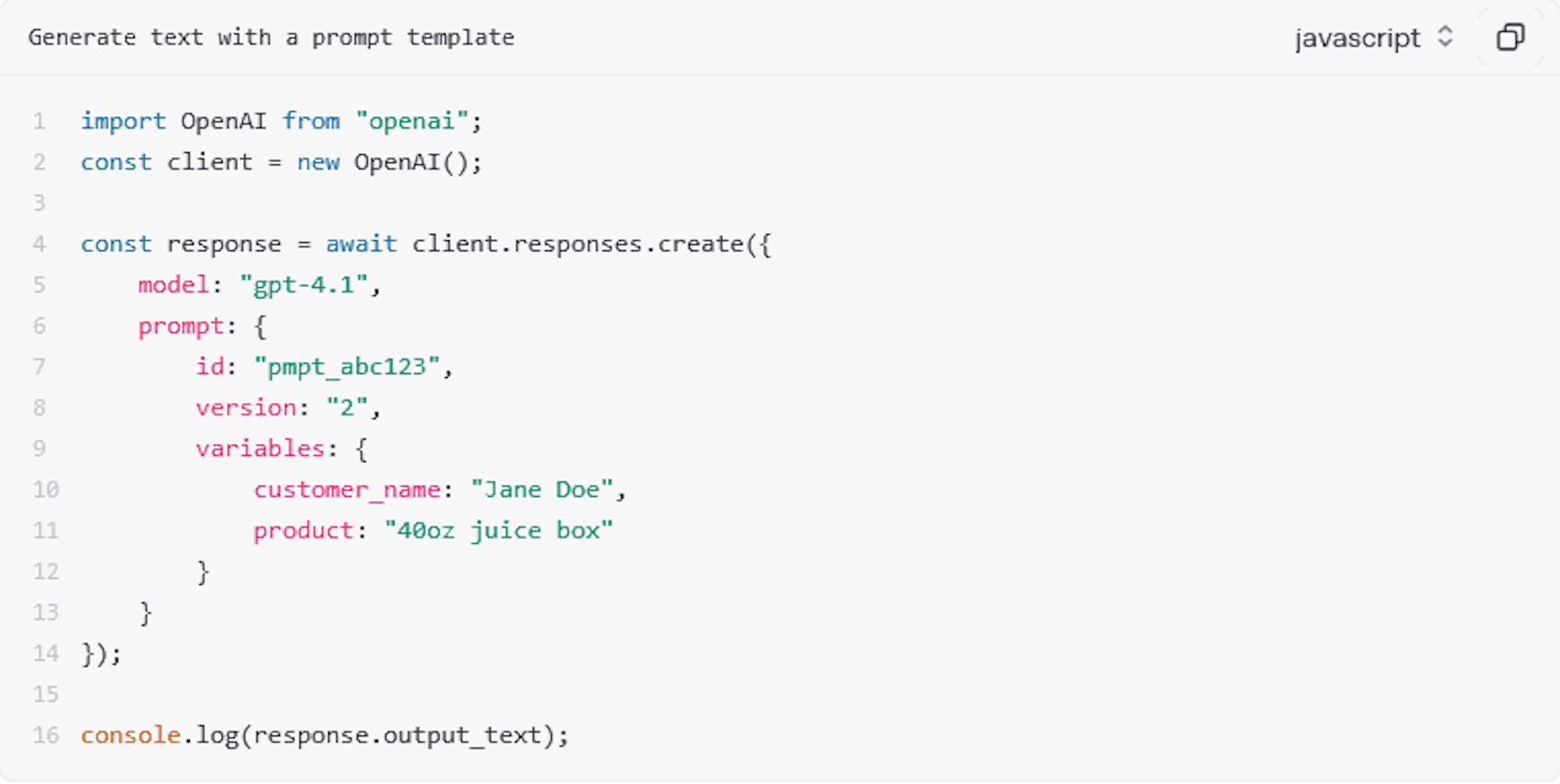

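Developers create a reusable prompt that includes placeholders such as {{customer_name}}, with the dynamic content pulled from a CRM or similar database. When calling the prompt via the API, developers pass a prompt parameter object that includes three key properties:

- `id`: the unique identifier of the saved prompt
- `version`: the version of the prompt to use
- `variables`: the values to substitute into the prompt’s placeholders, such as {{customer_name}}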
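As a rough sketch, the request body below references a saved prompt through the Responses API’s `prompt` object, which carries the `id`, `version`, and `variables` properties. The prompt ID `pmpt_abc123`, the version number, and the variable value are placeholders; other request fields (such as the model) are omitted; and `build_prompt_request` and `render_preview` are hypothetical helpers. OpenAI fills the placeholders on its side; `render_preview` only imitates that substitution locally to show the effect.

```python
import json
import re
from typing import Optional


def build_prompt_request(
    prompt_id: str,
    variables: dict[str, str],
    version: Optional[str] = None,
) -> dict:
    """Hypothetical helper: build a request body that references a saved prompt.

    Only the `prompt` object is set; other request fields are omitted.
    """
    prompt: dict = {"id": prompt_id, "variables": variables}
    if version is not None:
        # Pin a specific version; omitting it is assumed to use the current version.
        prompt["version"] = version
    return {"prompt": prompt}


def render_preview(template: str, variables: dict[str, str]) -> str:
    """Hypothetical local helper: fill {{name}} placeholders to preview the result.

    The real substitution happens server-side; this only illustrates it.
    """

    def substitute(match: re.Match) -> str:
        name = match.group(1)
        if name not in variables:
            raise KeyError(f"missing value for placeholder: {name}")
        return variables[name]

    return re.sub(r"\{\{\s*(\w+)\s*\}\}", substitute, template)


body = build_prompt_request(
    "pmpt_abc123",                  # placeholder ID; real IDs come from your workspace
    {"customer_name": "Jane Doe"},  # e.g. a value pulled from a CRM
    version="2",
)
print(json.dumps(body, indent=2))
print(render_preview("Hello {{customer_name}}, how can we help?", body["prompt"]["variables"]))
```

In practice the body would be sent to the Responses API, for instance through the `prompt` argument of `client.responses.create` in OpenAI’s Python SDK; the current API reference is the authority on which fields a given SDK version accepts.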

The initial rollout was generally well received, with one commenter noting that treating prompts as core infrastructure allows AI agents to update their instructions on the fly without requiring a new code deployment.

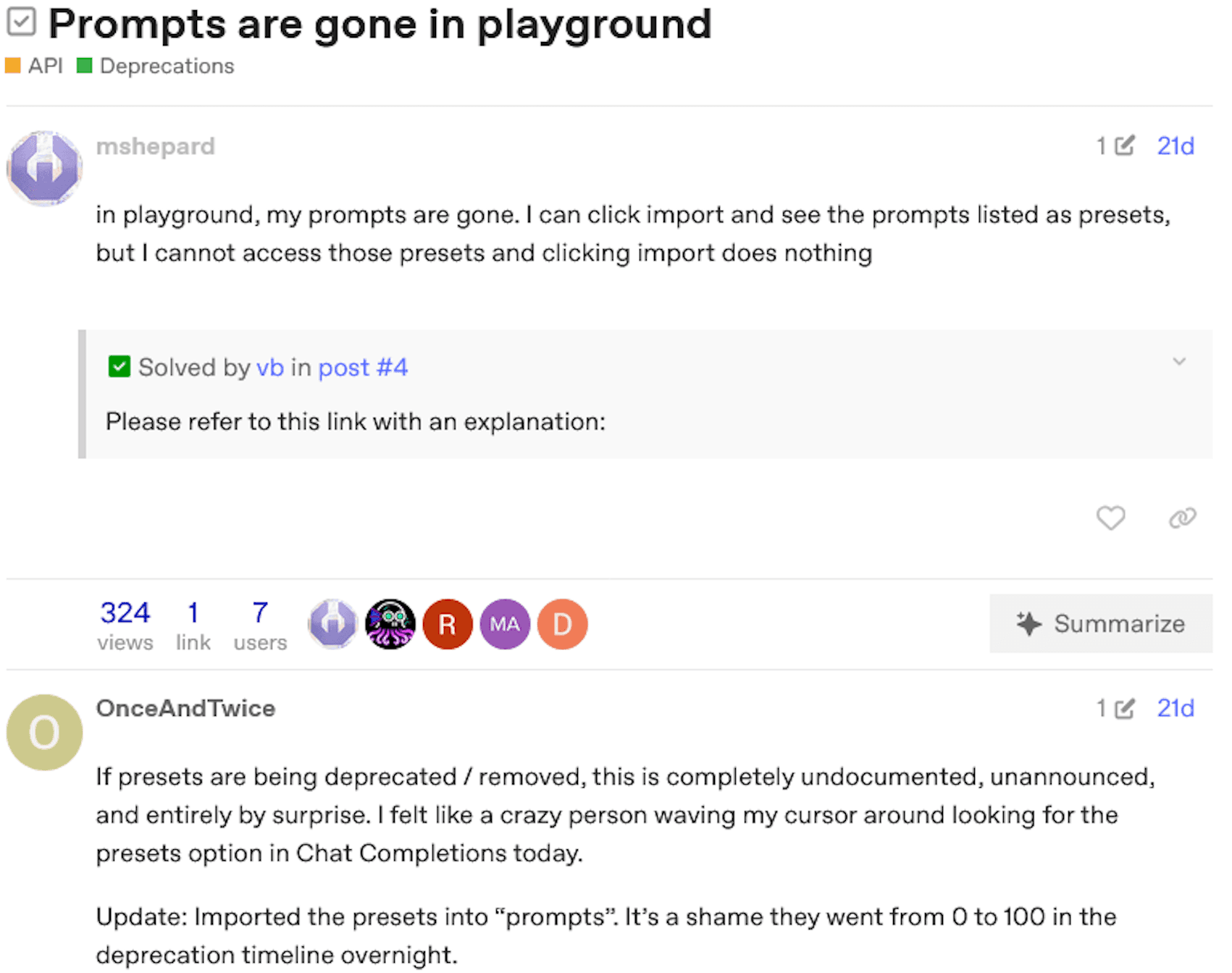

However, the rapid rollout created some confusion in the community, as there was no prior warning about the change. Many users reported that their prompts had disappeared from Playground, unaware that the prompts had moved to a centralized location in their OpenAI account.

OpenAI’s technical team reassured users that their prompt history had not been lost, explaining that they could import their old Playground presets.

As useful as centralized, reusable prompts may be, debates continue over their strategic value in AI development. Shopify CEO Tobi Lutke has championed the idea of “context engineering” as a more effective approach than traditional “prompt engineering.”

Prompt engineering focuses on optimizing the query itself to get the LLM to produce the desired output. In contrast, context engineering centers on designing the environment around the query – controlling what information the model sees, how it’s presented, and when it’s delivered – to ensure the model performs reliably.
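To make the distinction concrete, here is a toy sketch of one small piece of context engineering: choosing which information reaches the model and how it is labeled. It greedily packs the highest-relevance snippets into a fixed budget. The `Snippet` type, the relevance scores, and the use of word counts as a stand-in for tokens are all assumptions for illustration; real systems would use the model’s tokenizer and far richer retrieval and ordering logic.

```python
from dataclasses import dataclass


@dataclass
class Snippet:
    source: str       # where the information came from, e.g. "CRM" or "docs"
    text: str
    relevance: float  # hypothetical score from some upstream retriever


def assemble_context(task: str, snippets: list[Snippet], budget_words: int) -> str:
    """Greedily pack the most relevant snippets into a word budget.

    Word count is a rough stand-in for tokens (an assumption for this sketch).
    """
    chosen: list[Snippet] = []
    used = len(task.split())  # the task itself always goes in
    for snippet in sorted(snippets, key=lambda s: s.relevance, reverse=True):
        cost = len(snippet.text.split())
        if used + cost <= budget_words:  # skip anything that would overflow
            chosen.append(snippet)
            used += cost
    # Presentation matters too: label each piece so the model can tell sources apart.
    sections = [f"[{s.source}]\n{s.text}" for s in chosen]
    return "\n\n".join(sections + [f"[task]\n{task}"])


snippets = [
    Snippet("CRM", "Jane Doe is on the premium plan.", 0.9),
    Snippet("docs", "Premium plans include priority support.", 0.7),
    Snippet("blog", "Our company was founded in 2010.", 0.1),
]
print(assemble_context("Draft a reply to Jane's support ticket.", snippets, budget_words=20))
```

Greedy packing by relevance is only one possible policy; it keeps the sketch short, but it can leave budget unused that a smarter selection would fill.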

Esteemed AI researcher and engineer Andrej Karpathy echoes Lutke’s view, noting that most people associate prompts with short instructions for simple tasks. For industrial-grade LLM applications, however, “context” is far more critical — it’s the “...delicate art and science of filling the context window with just the right information for the next step.”

Karpathy explains, “I just think people's use of ‘prompt’ tends to (incorrectly) trivialize a rather complex component. You prompt an LLM to tell you why the sky is blue. But apps build contexts (meticulously) for LLMs to solve their custom tasks.”