9 Oct 2025 · 6 minute read

A new command-line tool is setting out to tame the sprawl of AI coding assistants by turning shared rules and context into ready-to-use configs for each editor.

The AI coding assistant landscape is becoming somewhat fragmented. Each tool, be it Cursor, Copilot, or Claude Code, expects its own configs, conventions, and rules. That means teams either duplicate the same guidance across multiple formats, or risk inconsistency when switching tools and editors. The result is drift, wasted time, and assistants that behave unpredictably.

The recent arrival of AGENTS.md helped address at least some of that chaos: a simple, open standard in which one Markdown file serves as the single source of truth for agent guidance. Instead of rewriting coding conventions, test commands, or architecture notes for every assistant, AGENTS.md provides one place to define them.

But AGENTS.md stops short of handling the translation problem: the gap between a shared spec and the fragmented reality of editor and tool configs. Teams still need to maintain those local files, and conflicts inevitably creep in. That’s where Rulebook-AI enters the fray, taking a shared ruleset and memory bank and automatically generating the right config files for each assistant a developer uses.

Or, as the Rulebook-AI creator themselves put it, they want to “elevate vibe coding to vibe engineering,” by codifying more free-form practices into structured guidance every assistant can follow.

The handiwork of developer Bo-ting Wang, Rulebook-AI is delivered as a command-line tool, available as an open source download on GitHub. Once installed, it gives developers a way to package coding rules, project context, and tools into a single definition.

This definition acts as the source of truth, which Rulebook-AI compiles into the specific config files required by different assistants.

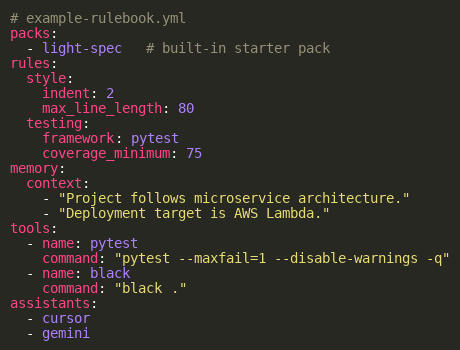

A typical workflow begins with defining a ruleset, which will usually be a structured file in YAML or JSON, where developers encode conventions such as coding style, testing practices, or project-specific shortcuts. With the ruleset in place, running a simple CLI command (e.g. `rulebook-ai project sync`) generates the corresponding configs for each assistant, such as `.cursor/rules` for Cursor or `GEMINI.md` for Gemini. These outputs can then be dropped directly into the relevant editor environments, ensuring consistency without the overhead of reconciling formats by hand.
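
The compile step described above can be illustrated with a short Python sketch. It is not Rulebook-AI’s code: the ruleset fields (`project`, `rules`), the renderer functions and the `.cursor/rules/project.mdc` file name are all hypothetical, and the Cursor front matter is an assumption about that editor’s rule format. What it shows is the shape of the idea: one parsed definition, several small renderers, one output file per assistant.

```python
import json
import tempfile
from pathlib import Path

# Hypothetical ruleset, as it might look after parsing a JSON file.
RULESET_JSON = """
{
  "project": "demo-service",
  "rules": [
    {"id": "style", "text": "Follow PEP 8 and format with black."},
    {"id": "tests", "text": "Run pytest before proposing a change."}
  ]
}
"""


def render_body(ruleset: dict) -> str:
    """Render the shared rules as a Markdown heading plus bullet list."""
    lines = [f"# Rules for {ruleset['project']}", ""]
    lines += [f"- {rule['text']}" for rule in ruleset["rules"]]
    return "\n".join(lines) + "\n"


def render_cursor(ruleset: dict) -> dict[str, str]:
    # Assumed layout: one always-applied .mdc file under .cursor/rules/.
    front_matter = "---\ndescription: Shared project rules\nalwaysApply: true\n---\n"
    return {".cursor/rules/project.mdc": front_matter + render_body(ruleset)}


def render_gemini(ruleset: dict) -> dict[str, str]:
    # Assumed layout: a single GEMINI.md at the project root.
    return {"GEMINI.md": render_body(ruleset)}


# Hypothetical registry mapping assistant names to renderer functions.
RENDERERS = {"cursor": render_cursor, "gemini": render_gemini}


def sync(ruleset: dict, root: Path, assistants: list[str]) -> list[Path]:
    """Write one config per requested assistant and return the paths written."""
    written = []
    for name in assistants:
        for rel_path, content in RENDERERS[name](ruleset).items():
            target = root / rel_path
            target.parent.mkdir(parents=True, exist_ok=True)
            target.write_text(content, encoding="utf-8")
            written.append(target)
    return written


if __name__ == "__main__":
    ruleset = json.loads(RULESET_JSON)
    with tempfile.TemporaryDirectory() as tmp:
        for path in sync(ruleset, Path(tmp), ["cursor", "gemini"]):
            print(path.relative_to(tmp).as_posix())
```

In this sketch, supporting another assistant means adding one renderer to `RENDERERS`; the shared ruleset itself does not change.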

This ruleset might specify a project’s language and style conventions, testing framework, and a few core rules for code quality. Rulebook-AI also supports the idea of Packs, which are prebuilt collections of rules and tools that serve as a starting point. One of the built-in Packs is `light-spec`, which lays down a lightweight set of defaults. Developers can load `light-spec` and then extend it with their own project-specific guidance.
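
One plausible way to model “load a pack, then extend it” is an override-by-id merge, sketched below in Python. The pack contents, the rule `id` field and the merge policy are assumptions for illustration only, not a description of how the `light-spec` pack is actually stored or applied.

```python
# Hypothetical contents for a starter pack; the real light-spec pack differs.
LIGHT_SPEC_PACK = [
    {"id": "plan-first", "text": "Outline a plan before editing code."},
    {"id": "tests", "text": "Add or update tests with every change."},
]

# Hypothetical project-specific rules layered on top of the pack.
PROJECT_RULES = [
    {"id": "tests", "text": "Use pytest; run `pytest -q` before committing."},
    {"id": "style", "text": "Format Python with black."},
]


def apply_pack(pack: list[dict], overrides: list[dict]) -> list[dict]:
    """Start from the pack's rules; project rules replace same-id rules or are appended."""
    merged = {rule["id"]: rule for rule in pack}  # dicts preserve insertion order
    for rule in overrides:
        # Reassigning an existing key keeps its position; a new key goes at the end.
        merged[rule["id"]] = rule
    return list(merged.values())


if __name__ == "__main__":
    for rule in apply_pack(LIGHT_SPEC_PACK, PROJECT_RULES):
        print(f"{rule['id']}: {rule['text']}")
```

Overriding by id keeps the pack’s ordering stable while letting a project tighten a default (here, the testing rule) without copying the whole pack.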

Running the CLI with this ruleset creates a `.rulebook-ai` directory for state, and generates assistant-specific files such as `.cursor/rules` for Cursor and `GEMINI.md` for Gemini.
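
As a hypothetical illustration of what a state directory makes possible (Rulebook-AI’s actual state format is not described here), the Python sketch below records a SHA-256 hash of every generated file in an assumed `.rulebook-ai/state.json`, then flags files that were edited by hand or deleted since the last sync: the kind of drift a shared ruleset is meant to prevent.

```python
import hashlib
import json
import tempfile
from pathlib import Path

# Hypothetical state file name; not taken from Rulebook-AI itself.
STATE_FILE = Path(".rulebook-ai") / "state.json"


def digest(text: str) -> str:
    """SHA-256 hex digest of a file's text content."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def record_outputs(root: Path, outputs: dict[str, str]) -> None:
    """Write generated files and remember a hash of each in the state directory."""
    for rel_path, content in outputs.items():
        target = root / rel_path
        target.parent.mkdir(parents=True, exist_ok=True)
        target.write_text(content, encoding="utf-8")
    state = {rel_path: digest(content) for rel_path, content in outputs.items()}
    state_path = root / STATE_FILE
    state_path.parent.mkdir(parents=True, exist_ok=True)
    state_path.write_text(json.dumps(state, indent=2), encoding="utf-8")


def find_drift(root: Path) -> list[str]:
    """Return generated files that were edited by hand or deleted since the last sync."""
    state = json.loads((root / STATE_FILE).read_text(encoding="utf-8"))
    drifted = []
    for rel_path, expected in state.items():
        target = root / rel_path
        if not target.exists() or digest(target.read_text(encoding="utf-8")) != expected:
            drifted.append(rel_path)
    return drifted


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        record_outputs(root, {"GEMINI.md": "- Run pytest.\n",
                              ".cursor/rules/project.mdc": "- Run pytest.\n"})
        # Simulate a teammate hand-editing one generated file.
        (root / "GEMINI.md").write_text("- Run pytest.\n- Hand-edited rule.\n", encoding="utf-8")
        print(find_drift(root))  # ['GEMINI.md']
```

Hashing the generated outputs, rather than diffing them, keeps the state small and makes the check cheap enough to run before every sync.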

Moreover, because Rulebook-AI is open source, teams can audit the code, adapt it to their own environments, or contribute new integrations for emerging assistants.

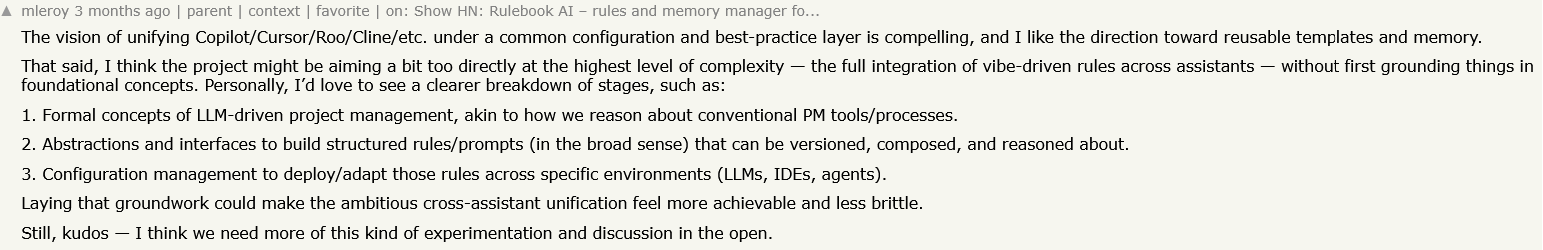

It’s still early days for Rulebook-AI, and there is only a trickle of community reaction so far. However, one Hacker News commenter described the project’s goals as “compelling,” adding that they “like the direction toward reusable templates and memory.”

At the same time, they cautioned that the project might be reaching too far, too fast. More specifically, they felt that Rulebook-AI was trying to unify the more free-form, “vibe-driven” style of coding across assistants before laying the necessary conceptual groundwork and intermediate steps.

“I think the project might be aiming a bit too directly at the highest level of complexity — the full integration of vibe-driven rules across assistants — without first grounding things in foundational concepts,” they wrote.

Whether Rulebook-AI gains traction remains to be seen, but its goal is clear: to move from the chaos of vibe coding toward something more structured, reusable, and consistent across environments.

And the emergence of such new standards and tools highlights the growing push to bring order to an unruly AI coding ecosystem.