29 Sept 2025 · 10 minute read

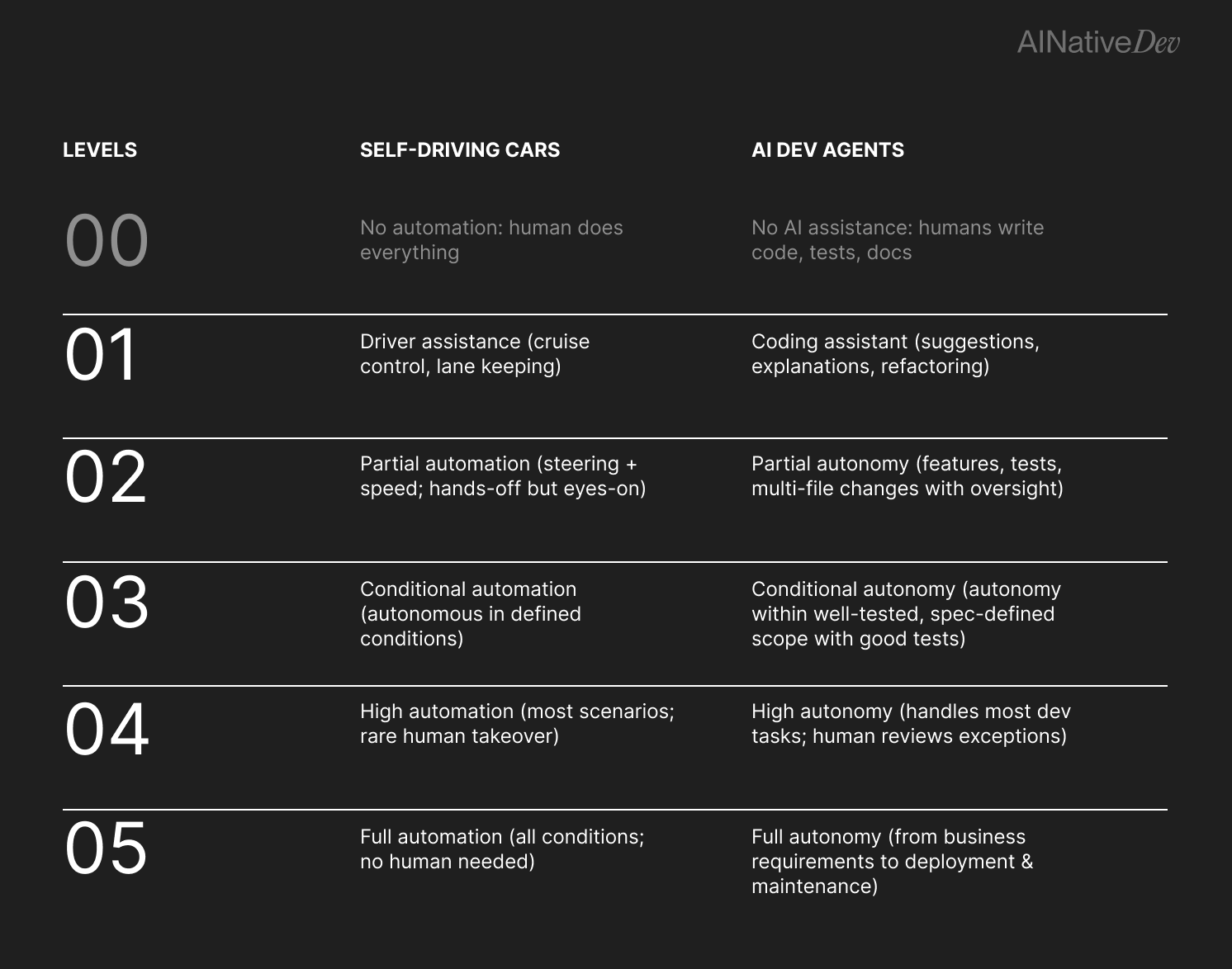

How autonomous can AI agents become? We're keen to access their power, but afraid of their unpredictability. Fortunately, it's not the first time we've faced this problem. Self-driving cars have been grappling with autonomy for a while, and the industry developed a model (SAE J3016) to describe the different levels of driving automation.

The autonomy spectrum runs from Level 0 (no automation) and Level 1 (mostly manual, with some assistance) through Level 5 (full autonomy), giving car makers clear milestones while helping everyone understand where the industry stands.

This framework also works surprisingly well for AI agents, with “Level 0” representing the pre-AI era, and Level 5 being complete automation.

Level 0 is the pre-self-driving baseline, where the human does everything. In software development, it’s the same story: the pre-AI era, where humans write all the code, tests, and documentation themselves. There’s nothing wrong with Level 0, but once you’ve experienced higher levels of automation, going back feels unnecessarily difficult.

Level 1 self-driving provides driver-assistance features such as adaptive cruise control or lane-keeping assist. The car can help with one thing at a time, but the driver remains fully engaged and responsible.

In the agentic development world, Level 1 is GitHub Copilot suggesting your next line, ChatGPT explaining a complex algorithm, or Claude helping you refactor a function. The AI assists with discrete tasks, but you approve every step. You’re still the driver.

The key to success in Level 1 is helping the AI understand the code and your intent, which is why clear docs, comments, and specs make its suggestions more useful. Check out the Spec Registry if you need a way to get specs to agents.

Level 1 is where many developers still work, and the rule is simple: the AI suggests, you decide. Like adaptive cruise control on a long drive, Level 1 agentic coding makes the journey less taxing without fundamentally changing who's in control.

At Level 2, the car can control steering and speed simultaneously. It can handle highway driving or park itself, but the driver must stay alert and ready to take over. It's "hands-off but eyes-on."

Level 2 AI agents are everywhere now - Claude Code, Cursor's Composer, OpenAI’s Codex, Devin, etc. These tools can complete features, write comprehensive test suites, or implement complex changes across multiple files. They understand context, make many implementation decisions and produce complete, runnable code from natural language descriptions.

But here's the catch: the constant supervision is exhausting. You describe what you want, the agent produces a solution that almost works (but not quite), and you still need to review every change, catch subtle bugs, and verify that requirements were properly understood. The bigger the task the agent completes, the more you need to review, and the harder it is to understand what the agent did and get it back on track.

The value of Level 2 is still compelling - you're reviewing and refining rather than writing from scratch. Some developers even engage in "vibe-coding" - accepting agent output based on intuition and trusting tests to catch issues. It's faster, but risky.

An emerging pattern to make Level 2 work better is to go spec-driven - capturing and reviewing requirements, tests, and architecture before a change is made. Define boundaries and expectations up front so the review stays manageable. The Tessl Framework is one way to do this.
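To make "define boundaries up front" a little more concrete, here is a minimal Python sketch. It assumes a spec records requirements, acceptance tests, and the set of files a change is allowed to touch, so that an agent's output can be checked against those boundaries before review. The `ChangeSpec` class, the `out_of_bounds` helper, and the file paths are hypothetical illustrations, not part of the Tessl Framework or any agent's API.

```python
from dataclasses import dataclass, field
from fnmatch import fnmatch


@dataclass
class ChangeSpec:
    """Hypothetical spec, captured and reviewed before an agent makes a change."""
    requirements: list[str]
    acceptance_tests: list[str]
    # fnmatch-style glob patterns for files the change may touch
    # (note: in fnmatch, '*' also matches '/').
    allowed_paths: list[str] = field(default_factory=list)


def out_of_bounds(spec: ChangeSpec, changed_files: list[str]) -> list[str]:
    """Return the changed files that fall outside the spec's declared boundaries."""
    return [
        path
        for path in changed_files
        if not any(fnmatch(path, pattern) for pattern in spec.allowed_paths)
    ]


spec = ChangeSpec(
    requirements=["Add a CSV export to the reports page"],
    acceptance_tests=["tests/test_reports_export.py"],
    allowed_paths=["src/reports/*", "tests/test_reports_*.py"],
)

# Files an agent reported changing (illustrative only).
changed = ["src/reports/export.py", "tests/test_reports_export.py", "src/auth/session.py"]
print(out_of_bounds(spec, changed))
```

Anything the check flags (here, the change to `src/auth/session.py`) is a prompt to stop and review rather than accept on trust, which keeps the review scoped to what the spec said would change.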

Level 3 self-driving represents a major threshold. In specific conditions - say, highway driving in good weather - the car is fully autonomous. The human becomes a passenger ready to take control if required, but isn't actively involved in routine operation.

For agentic development, Level 3 means autonomous operation within well-defined boundaries. A software module with comprehensive tests is a natural candidate: the agent can refactor, optimize, or even re-implement it, as long as all tests pass. Other examples include refactoring code to meet new style guidelines or updating to a new version of a framework.

The key enabler for Level 3 is clear specs with comprehensive validation. This shifts from “spec-driven” to “spec-centric”: the source-of-truth is captured well enough that the agent can be let loose on the code.
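The Level 3 rule that an agent may refactor, optimize, or re-implement a module as long as all tests pass amounts to an acceptance gate. The sketch below assumes candidate implementations arrive as plain Python functions and tests as callables returning `True` or `False`; `accept_first_passing`, `attempt_1`, and `attempt_2` are hypothetical stand-ins for whatever a real agent produces, not any tool's API.

```python
from typing import Callable, Optional

Impl = Callable[[list[int]], list[int]]
Test = Callable[[Impl], bool]

# Hypothetical acceptance tests for a "deduplicate, keeping first occurrence" module.
TESTS: list[Test] = [
    lambda f: f([]) == [],
    lambda f: f([3, 1, 3, 2, 1]) == [3, 1, 2],
    lambda f: f([5, 5, 5]) == [5],
]


def passes_all(impl: Impl, tests: list[Test]) -> bool:
    """True only if every test passes; a raised exception counts as a failure."""
    for test in tests:
        try:
            if not test(impl):
                return False
        except Exception:
            return False
    return True


def accept_first_passing(candidates: list[Impl], tests: list[Test]) -> Optional[Impl]:
    """Accept the first candidate that passes every test; None means hand back to a human."""
    for impl in candidates:
        if passes_all(impl, tests):
            return impl
    return None


# Two hypothetical agent attempts: the first loses the original ordering.
def attempt_1(xs: list[int]) -> list[int]:
    return sorted(set(xs))


def attempt_2(xs: list[int]) -> list[int]:
    return list(dict.fromkeys(xs))  # dicts preserve insertion order


accepted = accept_first_passing([attempt_1, attempt_2], TESTS)
print(accepted.__name__ if accepted else "escalate to human")
```

The gate is only as strong as the tests behind it: with just the first test, `attempt_1` would have been accepted. That is why comprehensive validation, not the agent alone, is what makes Level 3 safe.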

This is where we start to see real productivity multipliers. Developers focus on defining what needs to be built - the specs, the tests, the acceptance criteria - while AI handles implementation details. It’s like working with a compiler, but at a higher level of abstraction.

Level 4 self-driving cars can handle most driving situations autonomously. Waymo robotaxis are already operating in several cities, navigating complex intersections and dealing with unexpected obstacles. Human intervention is only needed in exceptional circumstances - think severe weather or construction zones. Importantly, the car can safely pull over if it encounters a situation it can't handle.

Level 4 agentic development can handle most software development tasks autonomously within defined parameters. Agents understand architectural patterns, maintain consistency across codebases, make appropriate technology choices, and even identify when they need human input. The human role shifts dramatically - from implementing to specifying, from coding to reviewing exceptions.
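Knowing when to ask for help is the software equivalent of a Level 4 car pulling over. One hedged way to picture it: the agent acts on its own only when a task falls inside its defined parameters, and routes everything else to a human. The task kinds, the self-reported `confidence` score, and the 0.8 threshold below are illustrative assumptions, not how any particular agent decides.

```python
from dataclasses import dataclass

# Hypothetical "defined parameters": task kinds the agent may handle alone.
AUTONOMOUS_KINDS = {"refactor", "add_tests", "dependency_update"}
CONFIDENCE_THRESHOLD = 0.8  # illustrative cut-off, not a recommended value


@dataclass
class Task:
    kind: str
    confidence: float  # agent's self-reported estimate, 0.0 to 1.0 (assumed to exist)


def route(task: Task) -> str:
    """Return 'autonomous' for in-scope, high-confidence tasks; otherwise 'escalate'."""
    if task.kind in AUTONOMOUS_KINDS and task.confidence >= CONFIDENCE_THRESHOLD:
        return "autonomous"
    # The equivalent of pulling over: stop and ask for human input.
    return "escalate"


tasks = [
    Task("refactor", 0.95),
    Task("add_tests", 0.6),
    Task("schema_migration", 0.99),
]
print([route(t) for t in tasks])
```

Note that the out-of-scope task is escalated even at high confidence: the boundary, not the agent's self-assessment, decides what it may do unsupervised.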

Level 4 is also about scale: instead of just some modules being spec-centric, most of the software is, with agents handling the majority of implementation across the codebase.

We're not there yet, but the building blocks are emerging. When AI agents can maintain long-term context, preserve architecture, generate code that passes test suites, and know their limits, that will mark Level 4. This level demands not just better AI, but better ways of specifying software.

Level 5 self-driving means complete automation - no steering wheel, no pedals, no human intervention ever. The car handles everything, in all conditions, everywhere. Level 5 agentic development would be the same.

Like a Level 5 car with no steering wheel, a Level 5 development environment might not even expose the code. You specify business-level requirements, and the agent handles everything else - architecture, implementation, testing, deployment, monitoring, and maintenance.

At this point it isn’t just about building software faster — it’s the automation of software engineering as a discipline. Agents understand business goals, make architectural decisions, optimize for cost and performance, manage compliance, and update their own specs as needs evolve. It may sound like science fiction, but this is what full autonomy looks like.

We don't need to reach Level 5 for AI agents to transform software engineering. Each level brings value, and different scenarios call for different levels of autonomy. Some code will always benefit from human creativity and judgment. But for routine, well-understood tasks (CRUD operations, API integrations, test writing), higher levels of autonomy free developers to focus on novel problems and real value.

The best teams aren’t waiting for Level 5. They’re investing in better specs, comprehensive testing, and clearer architecture, and learning when to use Level 1 assistance versus Level 3 autonomy.

The self-driving car industry taught us autonomy isn't binary - it's a spectrum. The same applies to agentic development. Understanding these levels helps teams choose the right tools, structure work, and build the right skills.

Most of us are at Level 2 today, occasionally reaching Level 3. That’s fine: each level has its place. What matters is knowing where you are, and building the habits for what’s next.

Ultimately, the journey to full autonomy isn’t about flipping a switch. It’s momentum that builds, with each level mastered accelerating the next, compounding until what might seem experimental today becomes established practice.