12 Jun 2025 · 6 minute read

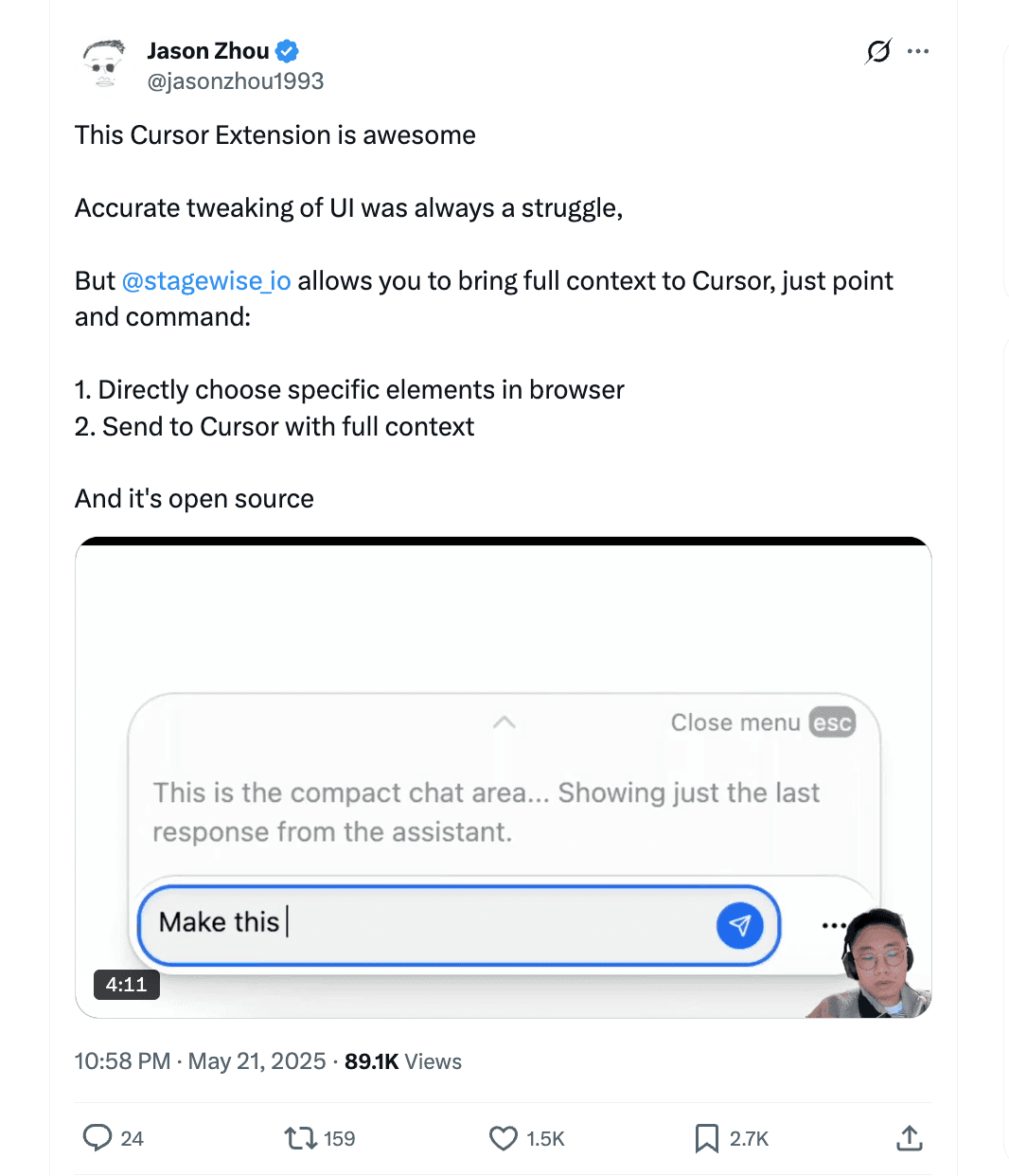

Released under the AGPL-3.0 license, Stagewise is an open-source VS Code extension and browser toolbar for precise UI prompting, offering a new way to build frontends with AI assistance. The tool connects a live web application’s front end to AI coding agents, letting developers click any UI element in their browser and edit it with natural-language prompts.

In practice, Stagewise overlays a toolbar on your app in development mode and sends the selected DOM element (along with context such as screenshots and metadata) to the AI agent in your IDE. In other words, where it’s hard to state in a prompt exactly which element you mean, Stagewise lets you point and prompt at the same time.

Framework support covers React, Next.js, Vue and Nuxt.js, and the tool works with the popular AI coding assistants Cursor, GitHub Copilot and Windsurf (Cline currently appears to have issues, which should be resolved soon). Developers can get started by installing the extension in VS Code and following the project’s quickstart instructions.

Stagewise’s release has generated significant buzz among developers on forums and social media, with reactions ranging from excitement to healthy skepticism. Many developers are impressed by the productivity boost in UI development.

The implication is that Stagewise brings the convenience of Vercel’s v0 (which has a dedicated UI builder) into the Cursor/VS Code world, and arguably goes further, since you work against your live app directly from your IDE. Developers who enjoy being early adopters of AI tools have been sharing success stories of quickly restyling components or swapping UI elements with a single prompt, thanks to the live DOM context Stagewise provides.

On the flip side, since the project is in its infancy, users report rough edges. In the announcement threads, a few people who tried Stagewise found it didn’t work seamlessly in their setup. While Stagewise supports many mainstream frameworks out of the box, there may be limitations with less typical front-end setups.

There’s a consensus that Stagewise addresses a real pain point. We’ve seen developers remark that they usually just describe UI elements in natural language when prompting Copilot, and that it “works well most of the time.” That said, as project complexity grows, prompts can become “really lengthy”. For example, when you specify “the element in position X that does Y”, the AI might misunderstand and make broad, unintended changes.

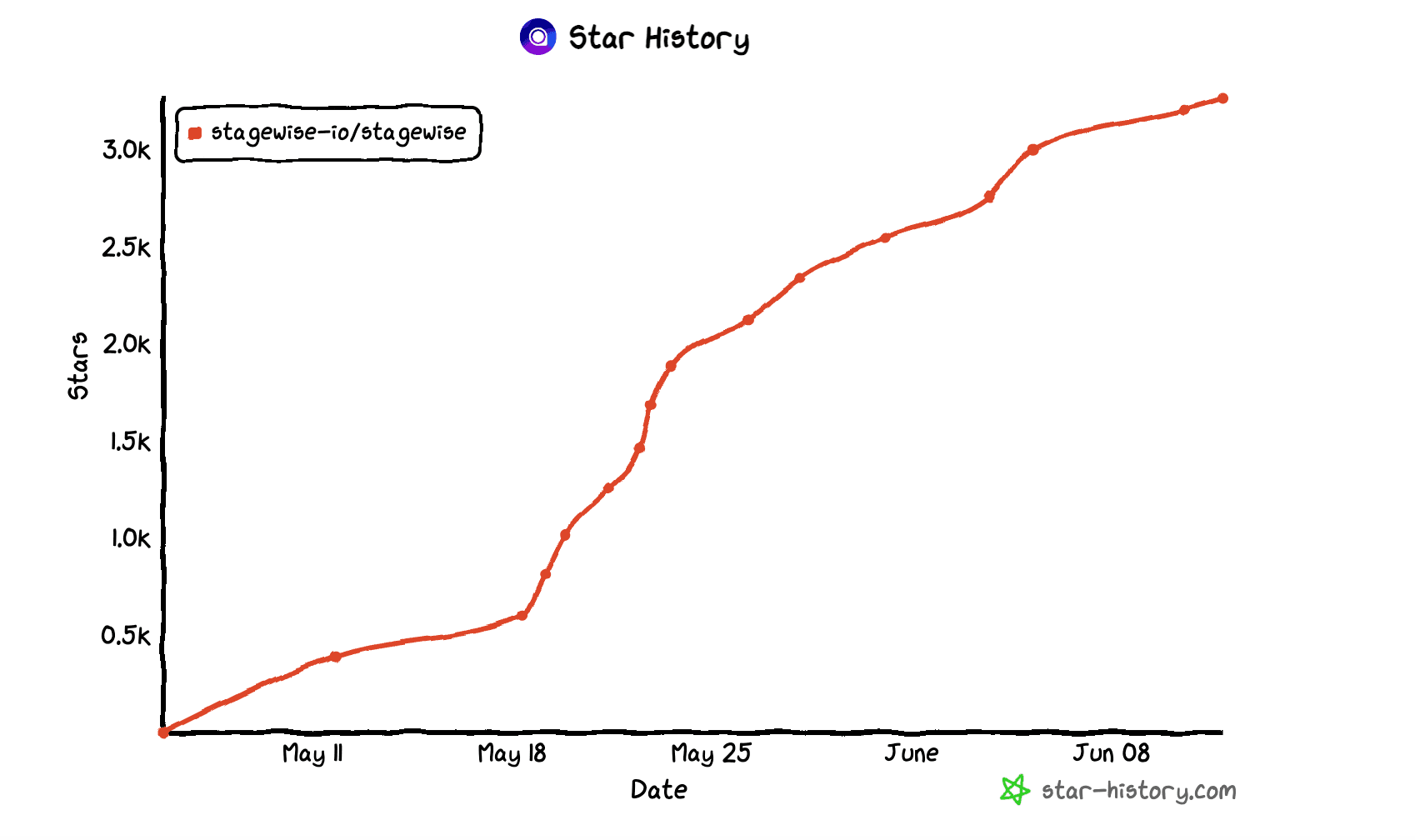

The rapid adoption metrics and word of mouth provide additional validation. The project’s GitHub repo amassed over 3k stars within a couple of weeks, and Stagewise’s site claims it’s already “embraced by engineers” at several big-name companies. While such claims deserve a grain of salt (early interest doesn’t always translate into long-term use), it’s clear Stagewise has tapped into real demand.

The arrival of Stagewise feels like a parallel to the rise of WYSIWYG editors. But rather than painstakingly dragging components into place, you gesture and describe your intent in natural language. The AI doesn’t just drop components where you point; it composes them contextually, adapting design and code on the fly.

The process of building software is fundamentally shifting: developers focus on expressing their intent, while the AI partner handles the implementation and leaves room for discovery. Stagewise offers a glimpse of that AI Native future: coding by feel and feedback rather than just by keystrokes, all while keeping the developer in the driver’s seat.

It’s conceivable that larger platforms will build Stagewise’s functionality themselves. An IDE like Cursor might bake in a similar UI-inspection feature natively, under pressure to offer comparable visual integration to stay competitive. Stagewise also has a technical limitation: if an app’s structure is complex or not DOM-centric, the current approach may falter.

I suggest trying Stagewise on a small project to get a sense of how precise UI coding could fit into your developer-to-agent workflow. You’ll likely hit roadblocks since the project is nascent (there are still a few bugs to iron out), but it sheds light on new avenues within the AI Native development space.

Stagewise may also risk taking developers further away from the code itself. That added abstraction can open the door to careless practices if the tool isn’t used deliberately. This isn’t a hypothetical concern: the term “vibe coding” itself has been criticized as somewhat tainted, and in some corners it has become shorthand for developers who blindly accept AI changes.

It’s essential to maintain good software hygiene: treat AI outputs as draft code, write tests whenever possible, and ensure you thoroughly understand the changes being made. The goal isn’t to surrender coding to an AI, but to offload the repetitive parts so you can focus on your intent.