7 Nov 2025 · 12 minute read

In software engineering, AI is either a big productivity-booster, or a chaotic compromise on quality – depending on who you ask.

However, a new report suggests both sides in that debate could be right.

In AI-assisted engineering: Q4 impact report, the first in a new quarterly series from developer analytics firm DX, the findings indicate that while the vast majority of developers now use AI coding tools and report saving several hours a week, the gains come with mixed results for code maintainability and reliability.

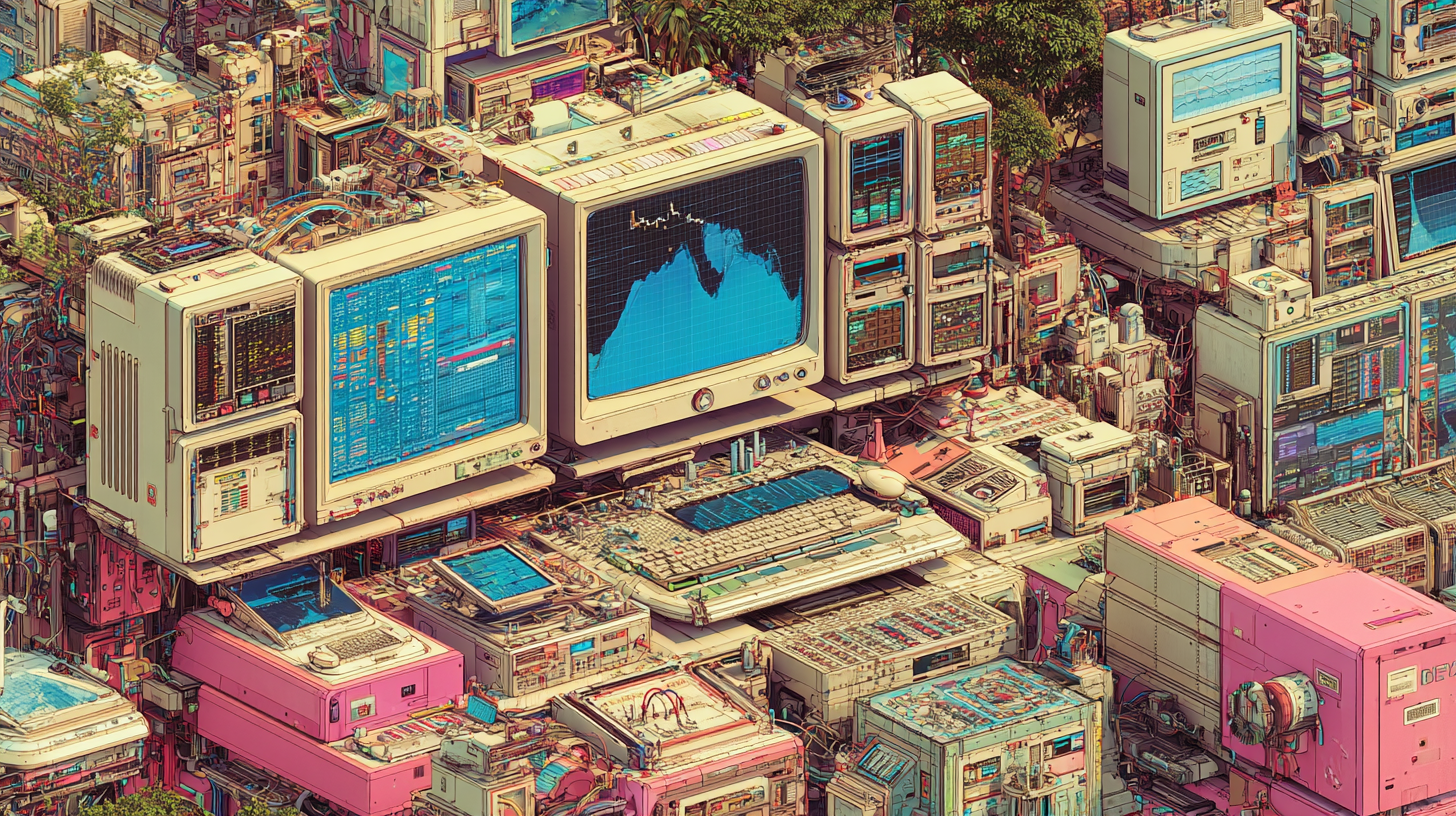

AI coding assistants have become a core part of the modern engineering toolkit, and the DX report backs this up. The overarching study draws on data from 135,000 developers across 435 companies, though its key AI adoption findings are based on system-level usage data from 85,350 developers whose activity with tools such as GitHub Copilot, Cursor and Claude Code could be directly measured. Across that group, industry-wide adoption now sits at just over 90% — a figure broadly consistent with other analyses, including Google’s recent State of AI-assisted Software Development Report, which also found adoption rates of around 90%.

But that headline number doesn’t tell the full story, according to DX CTO and report author Laura Tacho. “Adoption doesn’t equal impact,” Tacho writes. “Companies are struggling more than ever to understand how their AI investments are impacting their engineering performance.”

And yet, beneath that uncertainty, the data shows that AI is saving humans at least some time.

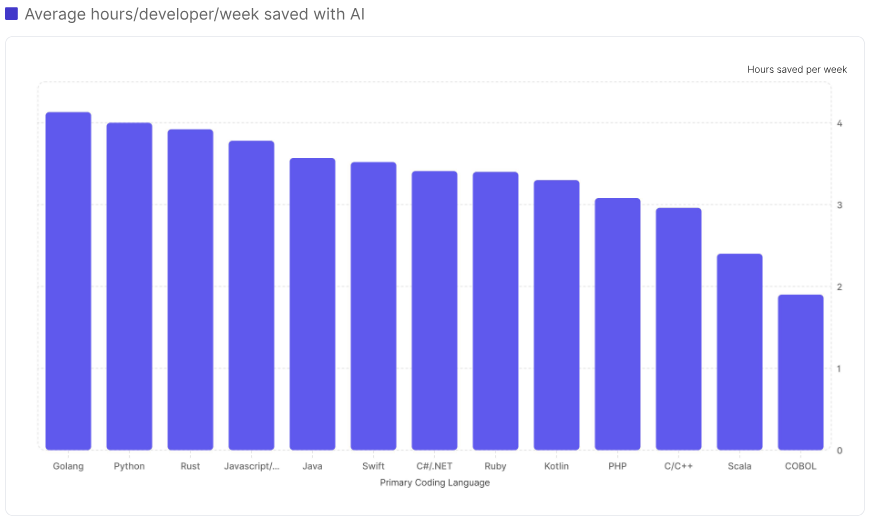

Developers who regularly use AI tools say they save an average of 3.6 hours per week. Daily users report the most, at 4.1 hours; weekly users report slightly less at 3.5 hours, and monthly users closer to 3 hours. These time savings have nearly doubled since Q4 2024, when the average hovered around two hours.

It’s worth noting that these figures are based on self-reported survey data gathered from 58,330 developers across 352 companies in DX’s network.

How this all translates into real-world code, well, that’s where perception and reality start to diverge.

Anyone monitoring some of the flashier headlines of late will have seen tech leaders touting some impressive progress. Coinbase CEO Brian Armstrong, for instance, recently said that about 40 percent of the company’s new code is now AI-generated, adding that his goal is to push that above 50 percent. Robinhood’s CEO Vlad Tenev, meanwhile, said that around half the company’s new code is already AI-generated.

Other figures bandied about by notable companies are a little more sedate. Back in April, Alphabet CEO Sundar Pichai said that more than 30% of the company's code is now created with the help of AI, while Microsoft chief Satya Nadella put Microsoft's figure at 20-30%.

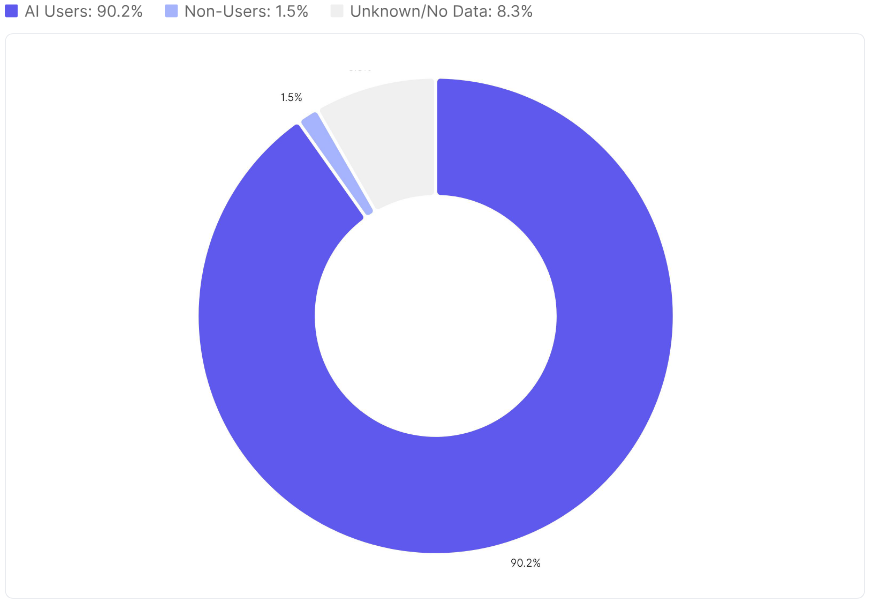

The self-reported data in the DX study points to a figure at the lower end of that spectrum. Across a sample of more than 34,000 developers at 248 companies, just 22 percent of merged code qualified as “AI-authored”, defined as code generated by AI and merged without major human rewrites or modifications. Even among daily users of AI coding assistants, the share rises only slightly, to 24 percent, with monthly users reporting just over 20 percent.

That contrast between big-company proclamations and what developers actually report captures one of the central tensions in the AI coding debate: the gap between marketing narrative and measurable impact. DX frames its findings not as a ceiling on AI’s potential, but as a baseline for how the technology is actually being used today.

“The number provides an important reality check against vendor marketing claims,” Tacho writes. “It also highlights a key point: while AI-generated code does not tell the full story of impact, it can be a meaningful signal when tracked alongside other data points.”

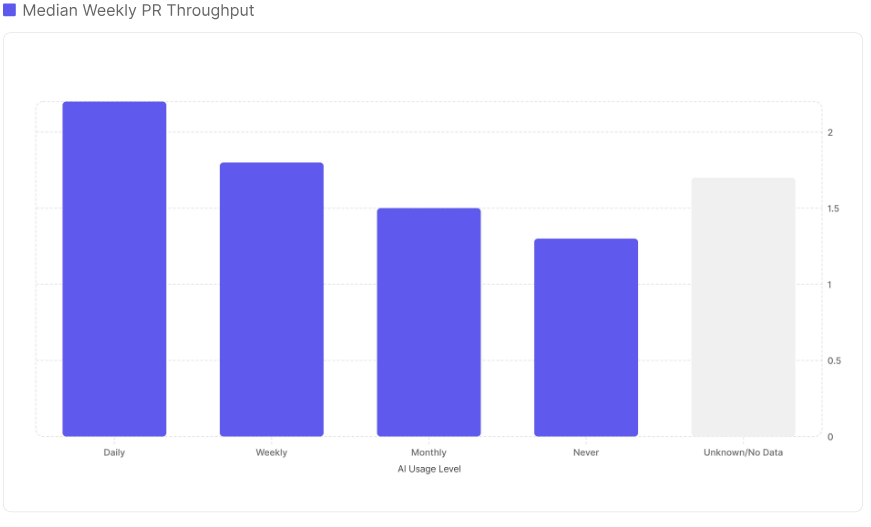

Such data points include the relationship between AI usage and developer throughput — specifically, the correlation between how often engineers use AI and how many pull requests they ship. Looking across more than 51,000 developers, DX found that daily AI users merge a median of 2.3 pull requests per week, compared with 1.8 for weekly users, 1.5 for monthly users, and 1.4 among those who don’t use AI at all.

In other words, developers who use AI daily merge roughly 60% more pull requests each week than those who don't use it at all, a pattern that has held steady over consecutive quarters.
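The “roughly 60%” figure can be checked against the medians DX reports for weekly merged pull requests. This minimal Python sketch uses only those four published medians; the dictionary layout, group labels and the `relative_uplift` helper are illustrative, not DX's own data format or method.

```python
# Median merged pull requests per developer per week, as reported by DX.
# The dictionary layout and labels are illustrative, not DX's schema.
median_prs_per_week = {
    "daily": 2.3,
    "weekly": 1.8,
    "monthly": 1.5,
    "non-user": 1.4,
}


def relative_uplift(group: str, baseline: str) -> float:
    """Percentage difference in median weekly PRs between two groups."""
    return (median_prs_per_week[group] / median_prs_per_week[baseline] - 1) * 100


for group in ("daily", "weekly", "monthly"):
    # Compare each AI-using group with developers who don't use AI at all.
    print(f"{group} vs non-user: {relative_uplift(group, 'non-user'):+.0f}%")

# Daily users compared with monthly users, the "sparing" end of AI use.
print(f"daily vs monthly: {relative_uplift('daily', 'monthly'):+.0f}%")
```

On these medians, daily users come out about 64% ahead of non-users and about 53% ahead of monthly users, so “roughly 60%” sits between the two comparisons. Because these are ratios of medians across groups, they describe a correlation rather than a measured causal effect.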

But frequency, throughput, and deployment velocity mean little if the output isn’t stable or reliable over time.

AI may be helping some developers write more code, faster. However, the DX data suggests that productivity and adoption tell only part of the story. When the company compared AI usage with quality indicators such as Change Failure Rate, Change Confidence, and Code Maintainability, the results were inconsistent: some organisations improved as AI use increased, while others saw reliability and maintainability decline.

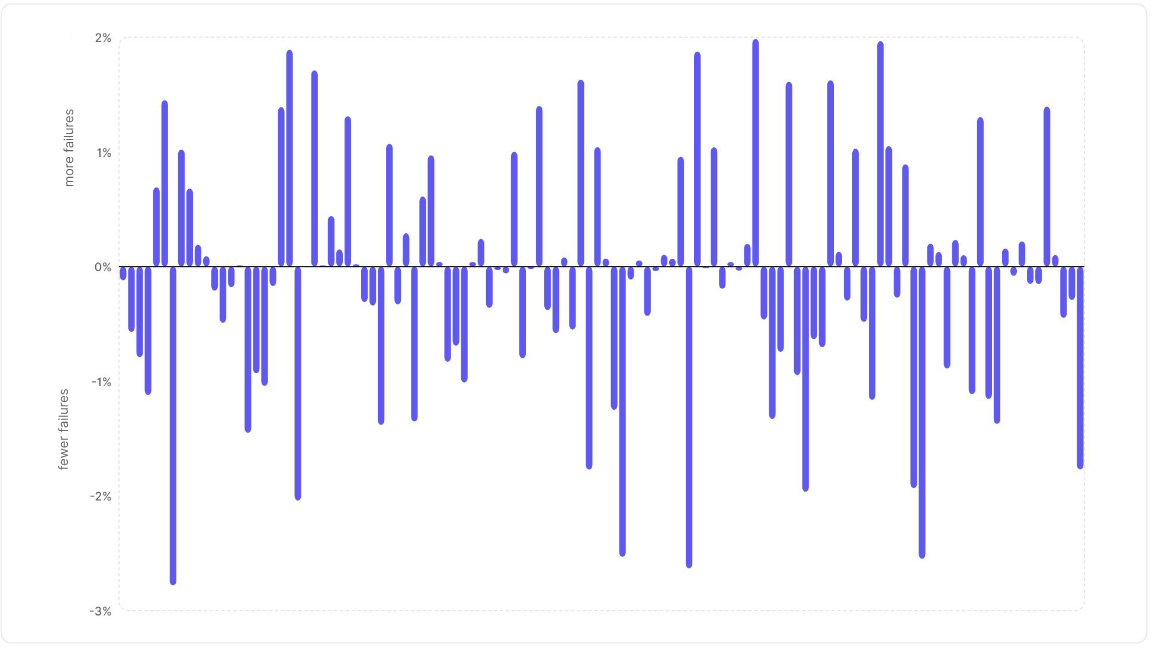

In DX’s data, Change Failure Rate (the percentage of changes that cause degraded performance requiring an immediate fix) produced no clear pattern. The chart shows roughly as many organisations improving as worsening, with most changes falling within a narrow ±1% band. The effect size, in other words, is small: AI adoption sometimes reduces breakages, sometimes increases them, but rarely by much.
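Change Failure Rate is a simple ratio of failed changes to total changes. As a minimal sketch, assuming a hypothetical per-organisation list of deployed changes, each flagged with whether it needed an immediate fix, the Python below computes the rate for two periods and classifies the shift against a ±1 band. The `Change` record, its field names and the sample data are invented for illustration, and reading the band as percentage points is an assumption.

```python
from dataclasses import dataclass


@dataclass
class Change:
    """Hypothetical record of one deployed change (not a DX or DORA schema)."""
    change_id: str
    needed_immediate_fix: bool  # e.g. triggered a hotfix or rollback


def change_failure_rate(changes: list[Change]) -> float:
    """Percentage of changes that needed an immediate fix."""
    if not changes:
        raise ValueError("cannot compute a rate with no changes")
    failed = sum(1 for c in changes if c.needed_immediate_fix)
    return 100 * failed / len(changes)


def classify_shift(before: float, after: float, band: float = 1.0) -> str:
    """Label a CFR shift; moves of at most `band` points count as flat."""
    delta = after - before
    if abs(delta) <= band:
        return "within band"
    # A higher failure rate is worse, a lower one is better.
    return "worse" if delta > 0 else "better"


# Illustrative data only: 4 of 100 changes failed before, 9 of 200 after.
before = [Change(f"b{i}", i < 4) for i in range(100)]
after = [Change(f"a{i}", i < 9) for i in range(200)]

cfr_before = change_failure_rate(before)  # 4.0
cfr_after = change_failure_rate(after)    # 4.5
print(classify_shift(cfr_before, cfr_after))  # within band
```

In this framing, an organisation only counts as improving or worsening once its rate moves by more than the band; a half-point rise like the one in the sample data registers as flat.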

Overall, the report describes quality outcomes as mixed rather than uniform across its sample.

“There is no ‘average’ experience with AI impact,” Tacho wrote in a blog post accompanying the report. “Some are doing very well when it comes to throughput, but quality is suffering; some have done a great job of using AI for migrations, but are struggling to incorporate AI in testing and release processes.”

The report attributes this divergence to factors like training quality, tooling maturity, and codebase complexity. “Successful AI integration requires more than just deploying the tool,” it notes. “It demands thoughtful measurement, implementation strategies, and proper training to ensure that velocity gains don’t come at the expense of software sustainability.”

DX’s data shows that while AI use is nearly universal, how developers use it — and what they get out of it — varies by role and seniority. Junior developers use AI tools more frequently than their senior counterparts, while senior engineers — particularly those at Staff+ levels — report the largest potential time savings, up to 4.4 hours per week. The report suggests this represents an opportunity for higher-leverage productivity gains if senior engineers adopt AI tools more consistently.

One of the more unexpected findings is that large, traditional enterprises are now leading on measurable AI impact. “Engineers at large organisations report saving more time with AI tools than those at startups or smaller companies,” said Tacho.

Structured training and governance programs, the report suggests, may be yielding better results than the looser, more experimental approaches typical in tech firms.

At the same time, DX warns of a growing “shadow AI” problem, in which developers use personal AI tools outside company oversight, raising compliance and data security risks. This aligns with a just-released report from security firm Cycode, which found that every company surveyed now has AI-generated code in production, yet 81% of security teams lack visibility into where and how it’s being used. Together, the findings underscore the scale of the governance challenge ahead.

Elsewhere, the DX report highlights a number of other notable trends in AI-assisted software engineering. For instance, AI delivers the biggest time savings in modern programming languages like Python, Java, and Go, where abundant public code gives models a stronger foundation.

AI’s impact is also evident in onboarding. Across a sample of 14,000 developers, those who used AI daily reached their 10th merged pull request in just 49 days, compared to 91 days for those who didn’t.
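The onboarding gap is easier to compare once it is expressed as a ratio. This short Python calculation uses only the two figures reported above (the variable names are illustrative) to express the difference as days saved, percentage reduction and speed-up.

```python
# Days to a developer's 10th merged pull request, as reported by DX.
days_daily_ai_users = 49
days_non_users = 91

# Absolute gap between the two groups.
days_saved = days_non_users - days_daily_ai_users

# Share of the non-user onboarding time that daily AI users avoided.
reduction_pct = 100 * days_saved / days_non_users

# How many times faster daily AI users reached the milestone.
speedup = days_non_users / days_daily_ai_users

print(f"{days_saved} days sooner, {reduction_pct:.0f}% less time, {speedup:.2f}x faster")
# prints "42 days sooner, 46% less time, 1.86x faster"
```

By this arithmetic, daily AI users reached the milestone in a little over half the time, roughly 1.9 times as fast as developers who didn't use AI.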

DX further notes that “structured enablement” – formal rollout programs, training, and guidance – strongly correlates with better outcomes across maintainability, confidence, and speed. “Simply handing out licenses is not enough,” the report states.

Finally, another interesting tidbit to emerge from the DX study is that AI’s reach is extending beyond traditional developers. Nearly two-thirds of product managers and designers at large enterprises use AI tools in their daily work, according to the report, blurring the boundaries of who “writes code.” This broadening participation is reshaping how software gets built, with product and design teams now able to prototype, test, and iterate with less engineering support.

But that democratization comes with new challenges. As more non-engineers begin contributing code, DX warns that existing safeguards may not be enough to ensure quality and security across the board.

“This expanding definition of developer also highlights the need for quality standards and review processes,” the report notes. “Code review and testing processes need to deal with AI-generated code and contributors with varying technical depth, while maintaining security and reliability standards.”

Looking ahead, the report’s findings suggest that the challenge is no longer AI adoption but using the technology responsibly and effectively at scale. Tacho frames this phase as a shift from widespread experimentation to measurable, mature impact, where the focus is less on adoption rates and more on outcomes.

“Earlier in the year, many leaders focused their AI strategies on adoption and activation, and it’s paid off,” Tacho wrote. “But now that adoption has ramped up, the focus needs to shift toward driving real impact.”

The full report is available from DX.